
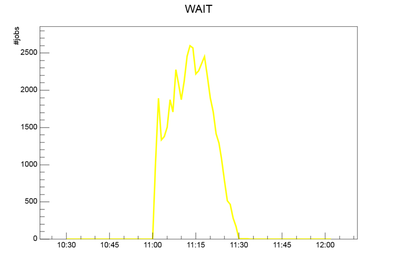

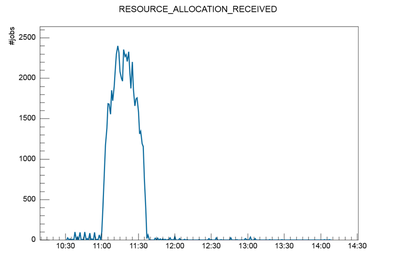

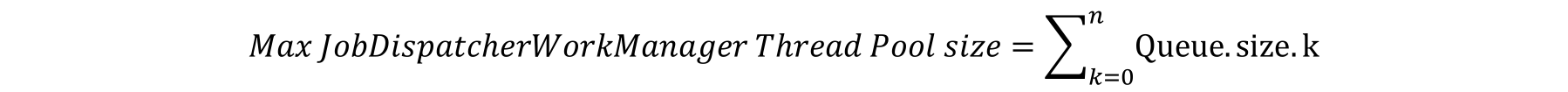

Workload Automation customers are increasingly moving to dynamic agent scheduling, managed by the Dynamic Broker component (the ITDWB enterprise application). Compared with traditional scheduling, dynamic agent scheduling adds extra processing. There are multiple steps between the job execute notification, sent via the mailman server to the Broker component, and the final dispatch of the job to the remote JobManager. Each of these steps corresponds to an internal job status in the JOB_BROKER_JOBS table of the DWB schema, and all of them map to the WAIT status in the plan (which can be checked using conman or the Dynamic Console). As documented, the WAIT status means that the job is waiting for its dependencies to be satisfied.

This blog explains how to retrieve a more meaningful, detailed status of jobs. In particular, under heavy workloads it can be useful to look at the overall status of jobs grouped by internal state, so that potential bottlenecks can be monitored. This can be done with the following query for a DB2 database:

Although there are many internal statuses, it is important to monitor some of them when jobs appear to hang internally. In this case study, the query above was used to sample the internal Broker status during a scheduling workload scenario, alongside sampling with ‘conman sj …’. During the peak, there were constantly thousands of jobs in WAIT status, and that plan status was associated with the internal Broker state “RESOURCE_ALLOCATION_RECEIVED”. This state means that all internal processing has been completed, including the assignment of logical resources (the CPU limit constraint had already been resolved by batchman). In this case study, the root cause of the queuing was found on the agent side: the kernel process run queue had a high occupancy level, which caused a high service time for the job execution requests coming from the Broker.
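Grouping jobs by internal state and spotting a state that stays crowded across samples (as RESOURCE_ALLOCATION_RECEIVED did during the peak) can be sketched in Python. This is a minimal illustration, not the author's query: it assumes the internal status values have already been fetched from JOB_BROKER_JOBS by some other means and works only on in-memory samples. Apart from RESOURCE_ALLOCATION_RECEIVED, the status names, sample sizes, threshold and window below are hypothetical placeholders.

```python
from collections import Counter
from typing import Iterable, Sequence


def count_by_status(statuses: Iterable[str]) -> Counter:
    """Group one sample of internal Broker statuses and count jobs per status."""
    return Counter(statuses)


def persistent_backlog(
    samples: Sequence[Counter], status: str, threshold: int, min_samples: int
) -> bool:
    """Return True if `status` held at least `threshold` jobs in
    `min_samples` consecutive samples (a hint of a possible bottleneck)."""
    run = 0
    for sample in samples:
        if sample.get(status, 0) >= threshold:
            run += 1
            if run >= min_samples:
                return True
        else:
            run = 0  # streak broken, start counting again
    return False


if __name__ == "__main__":
    # Hypothetical samples taken at successive intervals during a workload peak.
    # Only RESOURCE_ALLOCATION_RECEIVED comes from the case study; OTHER_STATE is a placeholder.
    raw_samples = [
        ["RESOURCE_ALLOCATION_RECEIVED"] * 1200 + ["OTHER_STATE"] * 50,
        ["RESOURCE_ALLOCATION_RECEIVED"] * 1500 + ["OTHER_STATE"] * 30,
        ["RESOURCE_ALLOCATION_RECEIVED"] * 1400,
    ]
    samples = [count_by_status(s) for s in raw_samples]
    for i, sample in enumerate(samples):
        print(i, dict(sample))
    print(persistent_backlog(samples, "RESOURCE_ALLOCATION_RECEIVED", 1000, 3))
```

Requiring several consecutive samples, rather than a single high reading, is one way to separate a sustained backlog from a short burst; in practice the threshold and window would have to be chosen against the observed workload.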
By monitoring the WorkManager.JobDispatcherWorkManager threads and the transaction service time, it was possible to detect that both had increased and that the number of threads had reached its cap during the workload peak. The caps are defined in the JobDispatcherConfig.properties file. For instance, the one for the threads that submit jobs to the remote JobManager is `Queue.actions.4=execute` with `Queue.size.4=30` (in this case the cap is 30). This value can be tuned (increased) so that more parallel threads process the jobs whose internal Broker state is “RESOURCE_ALLOCATION_RECEIVED”. However, increasing it is not advisable if the scheduling workload is defined against a limited set of dynamic agents, because it would cause further resource usage overhead on the agent side. In any case, when changing a Queue.size.# value, the following formula must be verified:

By running the query above over time, you can verify that the number of jobs in a final status changes according to the cleanup policy defined in JobDispatcherConfig.properties:

Pier is a Software Engineer with long-standing experience in the software performance discipline. Pier has a degree in Physics and is currently based in the HCL Products and Platforms Rome software development laboratory.
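The pairing between `Queue.actions.N` and `Queue.size.N` in JobDispatcherConfig.properties, and the check for a thread pool running at its cap, can be illustrated with a short Python sketch. It assumes a simple `key=value` line format (continuation lines and escapes of the full properties syntax are not handled), treats `Queue.actions.N` as a comma-separated action list (an assumption, since the text only shows a single action), and uses hypothetical samples of busy JobDispatcherWorkManager threads. All function names are illustrative; the sketch only checks whether the cap was reached and does not evaluate any sizing formula.

```python
from typing import Dict, Optional, Sequence


def parse_properties(text: str) -> Dict[str, str]:
    """Parse simple key=value lines, skipping blanks and # or ! comments.
    Continuation lines and escapes of the full .properties syntax are not handled."""
    props: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(("#", "!")) or "=" not in line:
            continue
        key, value = line.split("=", 1)
        props[key.strip()] = value.strip()
    return props


def queue_size_for_action(props: Dict[str, str], action: str) -> Optional[int]:
    """Return Queue.size.N for the queue whose Queue.actions.N lists `action`."""
    prefix = "Queue.actions."
    for key, value in props.items():
        if not key.startswith(prefix):
            continue
        index = key[len(prefix):]
        actions = [a.strip() for a in value.split(",")]  # assumed comma-separated
        size = props.get(f"Queue.size.{index}")
        if action in actions and size is not None:
            return int(size)
    return None


def cap_reached(active_threads: Sequence[int], cap: int) -> bool:
    """True if any sampled count of busy dispatcher threads hit the cap."""
    return any(count >= cap for count in active_threads)


if __name__ == "__main__":
    config = """
    # Excerpt in the style of JobDispatcherConfig.properties (values from the text)
    Queue.actions.4=execute
    Queue.size.4=30
    """
    props = parse_properties(config)
    cap = queue_size_for_action(props, "execute")
    print("execute queue cap:", cap)
    # Hypothetical samples of busy threads taken during a workload peak.
    print("cap reached:", cap is not None and cap_reached([12, 25, 30, 30], cap))
```

A sustained run of samples at the cap, together with growing service time, is the pattern described in the case study; whether raising `Queue.size.4` helps still depends on the agent-side capacity discussed above.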

