
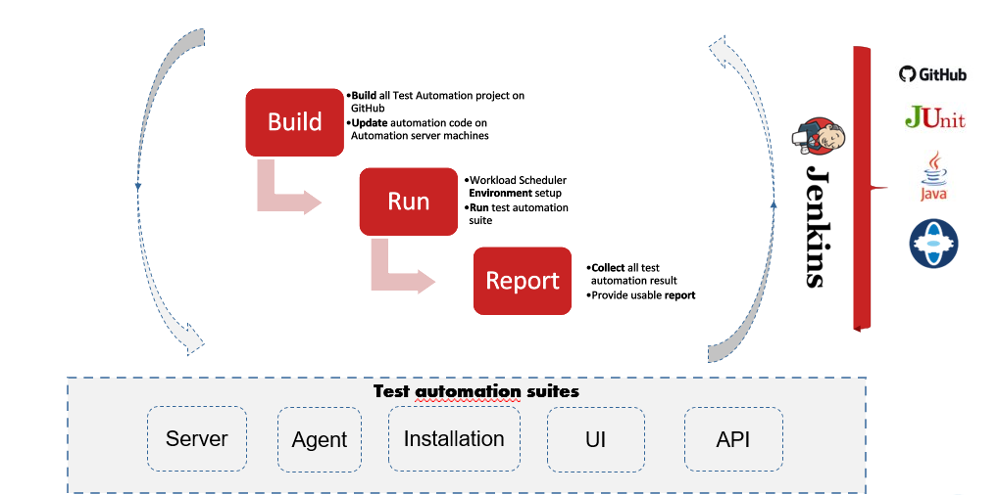

"Automate Anything, Run Anywhere" is our motto! What better place to start making it real than our very own Workload Automation test environment? We have been applying it in our Test Lab since Workload Automation version 9.5 became generally available in 2019, and through all the subsequent fix packs, including the latest one, Fix Pack 3.

Let's start by discovering how we leverage a Workload Automation deployment to run automation jobs that build our code and prepare packages for both our HCL and IBM brand offerings. The automation jobs trigger several automation suites for each brand. Every day, we run about 20,000 automated test scenarios that cover all the Workload Automation components (Dynamic Workload Console, master domain manager and its backup, the REST APIs layer, dynamic agents, and fault-tolerant agents). Rest assured that we always uphold backward compatibility and detect instability issues sooner rather than later! Our main goal is to avoid injecting defects and instability into the base functionalities used by most of our customers.

Let's take a deep dive into the automation world!

How many test automation suites?

Fig. 1 - Automation suites daily flow

The Workload Automation solution includes the following test automation suites:

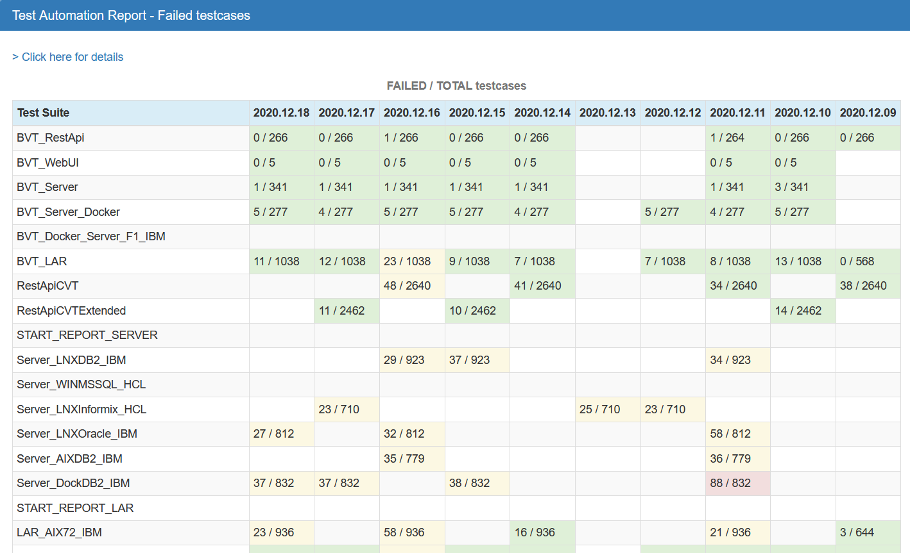

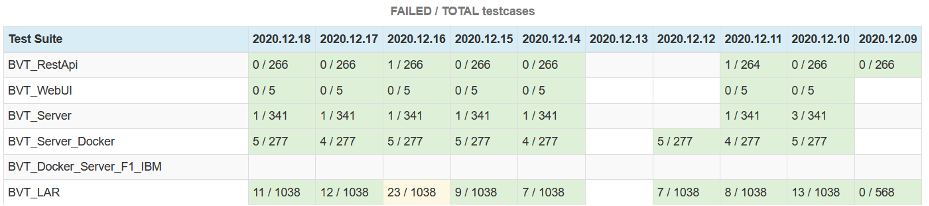

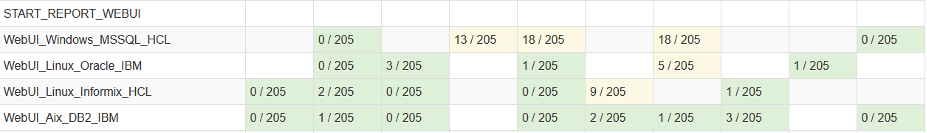

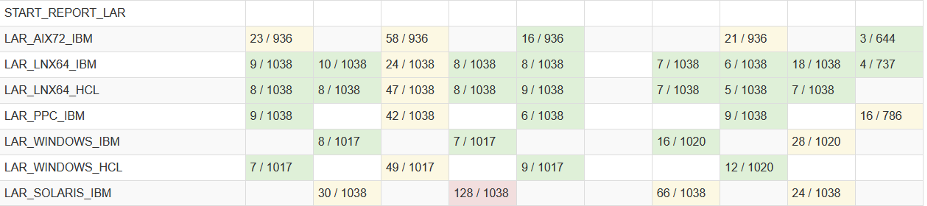

The latest results of the automation suites are available on our Jenkins site, where they are gathered in the Aggregate Test Report. The Aggregate Test Report is a giant matrix in which each row represents a Jenkins job that groups a list of test scenarios belonging to a specific suite, and each column corresponds to a date on which the Jenkins job ran. The color of each cell is updated each time the Jenkins job completes its daily or weekly run, and indicates the percentage of failed test cases out of the total number of test cases. The matrix has evolved continually from the test phase of the version 9.5 General Availability through the test phase of the latest fix pack.

Fig. 2 - Extract of the Aggregate Test Report

Let's look at the number of scenarios that run automatically every day for each automation suite.

BVT automation suites
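Each section of the Aggregate Test Report colors its cells by the percentage of failed test cases in a Jenkins job run. As a rough illustration of that idea only, the sketch below builds such a matrix in Python. The `JobRun` type, the job names, the dates, and especially the color thresholds are assumptions made for this example; the article does not state the actual thresholds or how the report is implemented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JobRun:
    """One run of a Jenkins job (hypothetical record, not a Jenkins API type)."""
    job: str      # row of the matrix: the Jenkins job name
    date: str     # column of the matrix: the date of the run
    total: int    # total number of test cases executed
    failed: int   # number of failed test cases


def failure_percentage(run: JobRun) -> float:
    """Return the percentage of failed test cases over the total."""
    if run.total == 0:
        # Assumption: a run with no test cases is treated as 0% failed.
        return 0.0
    return 100.0 * run.failed / run.total


def cell_color(pct: float) -> str:
    """Map a failure percentage to a cell color.

    The thresholds below are illustrative assumptions only.
    """
    if pct == 0:
        return "green"
    if pct < 10:
        return "yellow"
    return "red"


def build_matrix(runs: list[JobRun]) -> dict[str, dict[str, str]]:
    """Build {job: {date: color}}; a later run for the same cell overwrites it."""
    matrix: dict[str, dict[str, str]] = {}
    for run in runs:
        matrix.setdefault(run.job, {})[run.date] = cell_color(failure_percentage(run))
    return matrix


if __name__ == "__main__":
    # Made-up sample data, used only to show the shape of the result.
    sample = [
        JobRun("bvt-job", "day-1", total=200, failed=0),
        JobRun("bvt-job", "day-2", total=200, failed=10),
        JobRun("rest-job", "day-1", total=100, failed=50),
    ]
    for job, cells in build_matrix(sample).items():
        print(job, cells)
```

Keeping the percentage calculation separate from the color mapping means the thresholds can be changed without touching how results are collected.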

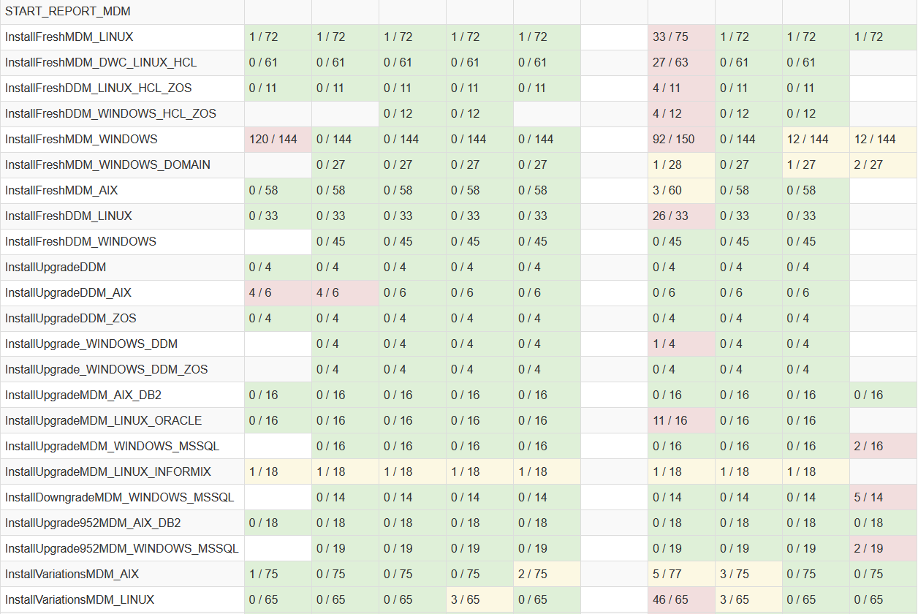

Fig. 3 - The BVT Aggregate Test Report section

Installation and upgrade automation suites

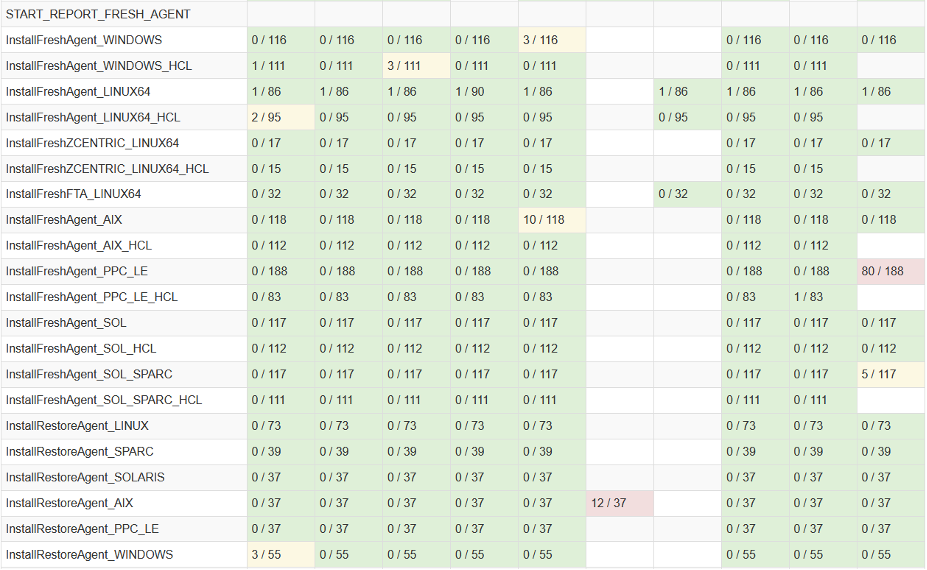

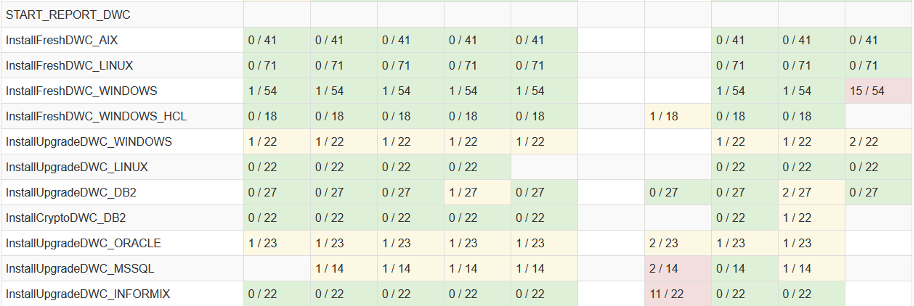

Fig. 4 - The agent and Z Workload Automation agent (z-centric) Aggregate Test Report section

Fig. 5 - The Server installation and upgrade Aggregate Test Report section

Fig. 6 - The console installation and upgrade Aggregate Test Report section

REST APIs automation suite

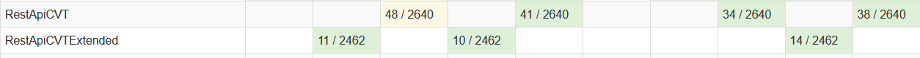

Fig. 7 - The REST APIs Aggregate Test Report section

Server automation suite

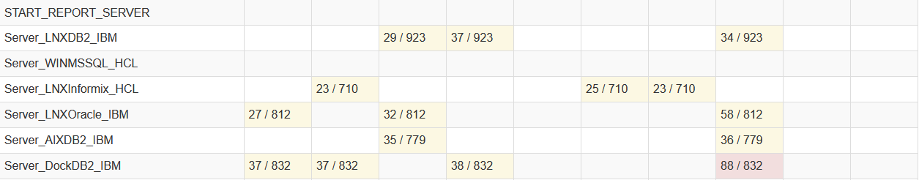

Fig. 8 - The server Aggregate Test Report section

Dynamic Workload Console automation suite

Fig. 9 - The Console Aggregate Test Report section

Dynamic agent automation suites (aka LAR)

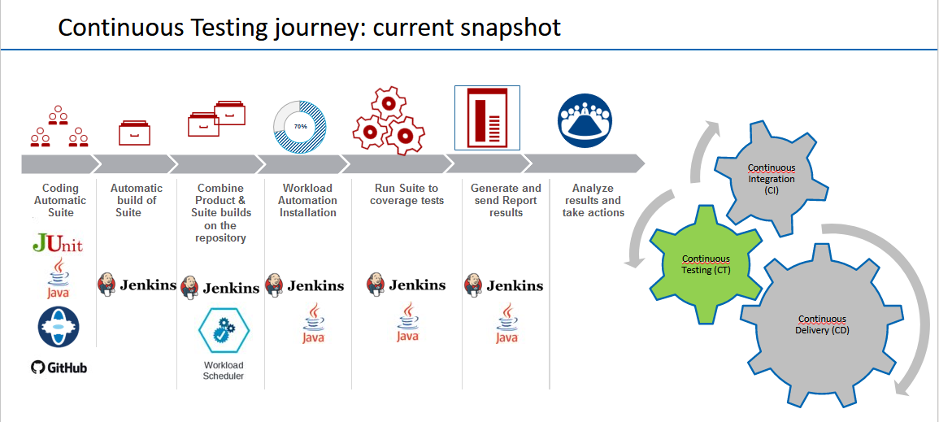

Fig. 10 - The agent suites Aggregate Test Report section

What is the flow of the daily WA test automation suites?

Fig. 11 - Detailed continuous testing journey

Every day, when a new product build is available, the automatic Jenkins workflow starts and performs the following steps:

During the development phase (when new product features are developed), the test automation suites are enriched to cover the features as they are developed. The main objective of test automation during this phase is to increase test coverage to include the new functionality being added to the product version under test.

During the formal System Verification Test (SVT) phase, the new automated test cases that were created during the development phase are merged into the pre-existing test automation suites. The daily runs of these enriched suites check the stability of the code, gain greater coverage as new tests are added to the regression package, and reduce test time compared to manual testing. The main objective of test automation during this phase is to demonstrate the stability of the product and to prevent backward compatibility issues, particularly those affecting the base features.

At the end of this journey, a specific success criterion must be met for the quality of the product to be considered good enough for release: each individual test automation suite must have a success rate higher than the one obtained for the previous release.

At the end of the Workload Automation 9.5 Fix Pack 3 development cycle, the success criteria were satisfied with the following values:

Moreover, the test automation framework described in this article allowed the Workload Automation team to find and fix around 240 defects during the 9.5 Fix Pack 3 development cycle.

We hope this article helps you understand the ecosystem in which the WA solution takes shape and evolves to satisfy our customers' needs. Learn more about Workload Automation here and get in touch with us by writing to [email protected].

Authors' BIO
