
Italy’s lockdown was declared on March 9th, 2020, to contain the spread of the coronavirus (COVID-19) in our country. The heart of HCL Workload Automation has historically beaten at the Rome Lab, where product managers, developers, and sales people share space and ideas every day to write the future of automation. Until the lockdown. We moved our work and social interactions to virtual channels and started working remotely. Actually, we are good at that: we have always adopted a blended approach, letting people work from home when needed, so on this front we were well prepared. But what happens when automation experts are confined at home in the middle of a pandemic? This is where our #italylockdown journal took an unexpected path.

FIGHTING THE VIRUS, WITH DATA

During a crisis there are two things that must never go out of sight:

How did this translate for us? On March 7th, the Civil Protection started publishing data on the spread of the virus in Italy, in open format, on GitHub. The dataset includes:

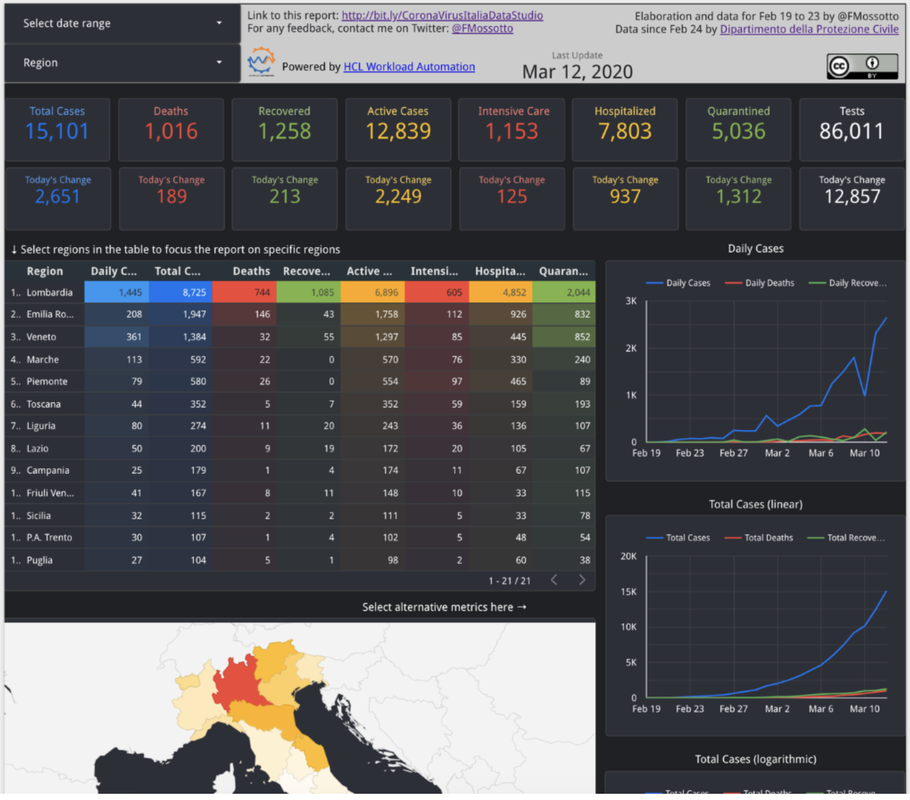

A few minutes after the conference, the data are published on GitHub and on an institutional dashboard that reports the basic figures. But what if I want to access the complete dataset in a clear, easy-to-understand way, one that shows trends and the situation in specific regions, with the data always available? Combining the power of HCL Workload Automation with these data and Google Data Studio dashboards was the natural next step.

AUTOMATION-ENHANCED DATA ANALYSIS

A few hours after the Civil Protection made the data available, we created an analysis dashboard that lets everybody follow and track the impact of the coronavirus in Italy.

MAKE SENSE OF DATA

We wanted to answer three main questions:

Google Data Studio was perfect for this purpose.

AUTOMATED DATA ANALYSIS

Second need: getting the data from the official source, integrating it, processing it, and publishing it requires different steps and different tools. People visit the dashboard right after 6 PM CET to check how the trends are changing, so to make the process efficient we needed to give it wings with an automation tool. That is exactly our expertise, and HCL Workload Automation was our most powerful ally in the field. The process of publishing the data is made of several steps:

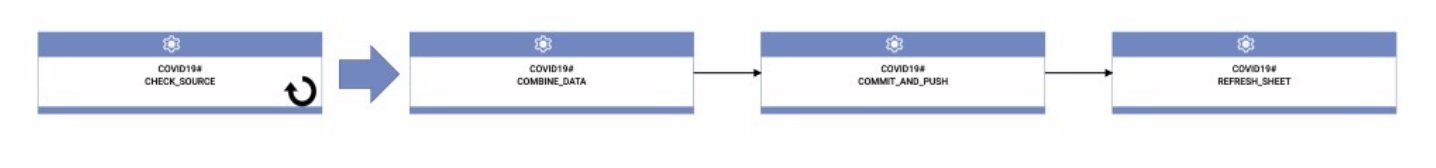

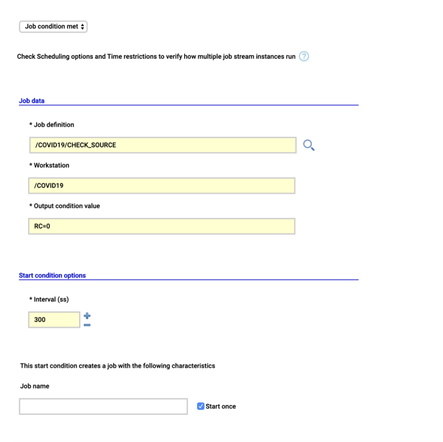

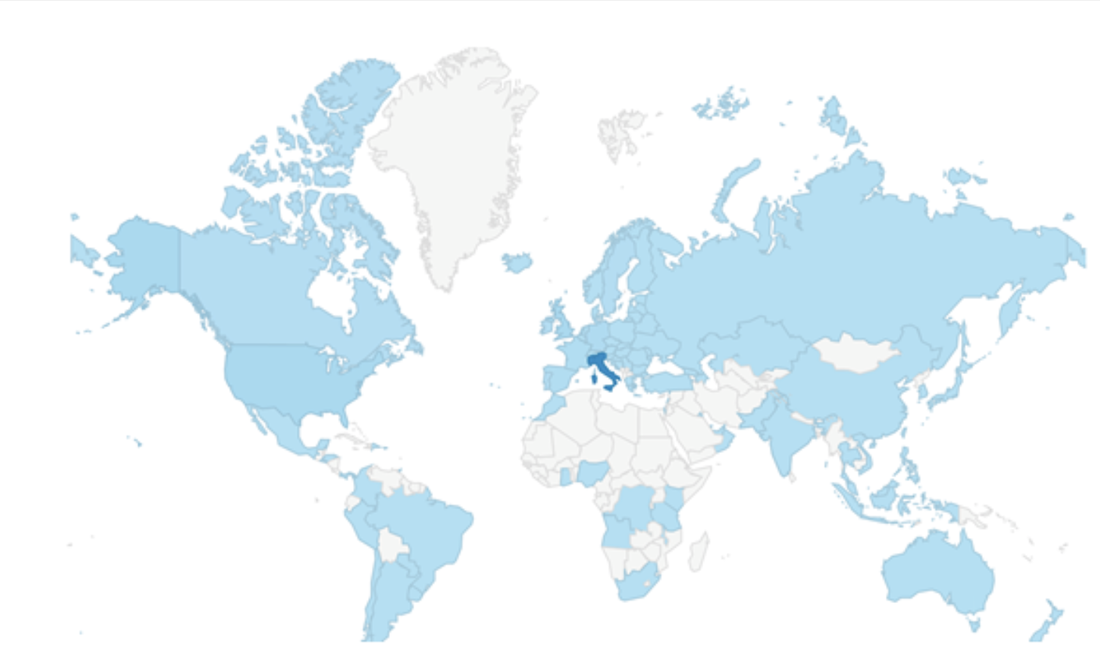

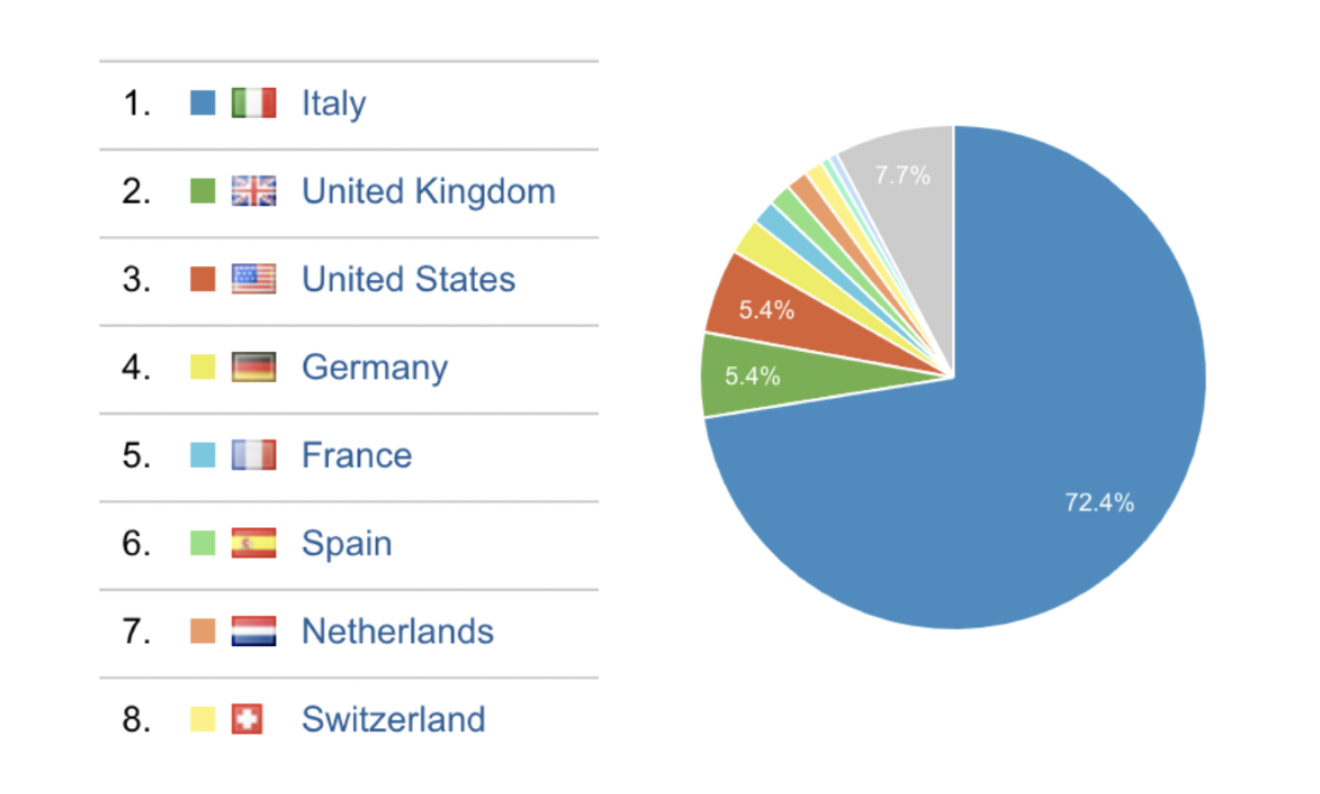

With this many steps, and with users expecting the refresh to happen quickly, running the process manually was not an option, especially since we are still at work at that time of day. As the HCL Workload Automation team, we have automation in our DNA and the right tool at hand. So we created our job stream, with three Executable jobs and one RESTful job. The job stream is scheduled every day at 6 PM CET.

The first job, CHECK_SOURCE, runs the check_submodule.sh script, which runs git pull and git submodule update to download the latest updates from the Civil Protection repository and then checks whether the file with today's date exists; the script exits with RC 0 only in that case. This is used in a “Job condition met” start condition for the job stream, set to retry every 5 until the script exits with RC=0. Since we expect one update per day, we checked the “Start once” option. The script accepts a single parameter, today's date, to perform the check; we use ${tws.plan.date} to pass the job stream's scheduled date.

When the update is available, the rest of the job stream is released. The COMBINE_DATA job runs the combine.sh script to merge in the data we keep for the first days of the outbreak, when the Civil Protection was not yet collecting data. Then the COMMIT_AND_PUSH job uses the git command line to commit the changes and push them to our repository on GitHub.

At this point we have to update the Google spreadsheet. The script that reloads the data and extends the formulas is written in Google Apps Script and runs on Google Cloud. To call it from HCL Workload Automation, we took advantage of the fact that an Apps Script can be exposed over HTTPS by implementing the doGet function. The REFRESH_SHEET job is a RESTful job that calls the Apps Script with a GET request and receives the result. In our case we only need the result in the job log for troubleshooting, but it is also possible to extract variables from the result and use them in subsequent jobs.
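The file check performed by CHECK_SOURCE can be sketched as follows. This is a minimal Python illustration, not our actual check_submodule.sh: it leaves out the git pull and git submodule update steps, and it assumes (hypothetically) that the checked-out data sits in a directory called `covid-data` and that the date arrives as a YYYYMMDD string that appears in the name of the day's file. What it shows is the exit-code contract: 0 when today's file exists, which satisfies the “Job condition met” start condition, and non-zero otherwise, which makes the condition retry.

```python
import sys
from pathlib import Path
from typing import List

# Hypothetical location of the checked-out Civil Protection submodule.
DATA_DIR = Path("covid-data")


def todays_file_exists(plan_date: str, data_dir: Path = DATA_DIR) -> bool:
    """True if a regular file in data_dir has plan_date in its name."""
    if not data_dir.is_dir():
        return False
    return any(entry.is_file() and plan_date in entry.name for entry in data_dir.iterdir())


def main(argv: List[str], data_dir: Path = DATA_DIR) -> int:
    """Map the check to a return code: 0 found, 1 not yet, 2 bad usage."""
    if len(argv) != 2:
        print("usage: check_source.py YYYYMMDD", file=sys.stderr)
        return 2
    # argv[1] would carry the job stream's scheduled date (${tws.plan.date});
    # the YYYYMMDD format is an assumption of this sketch.
    return 0 if todays_file_exists(argv[1], data_dir) else 1
```

To use it as the job's command, a `__main__` guard calling `sys.exit(main(sys.argv))` would turn the return value into the RC that the start condition tests.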
Of course, the whole environment (Master, DWC, and agent) runs in containers using Docker Compose.

IS IT REALLY USEFUL?

It is, and not just for Italians. According to usage data, people visit from all over the world. Traffic from Italy is naturally higher, but people in countries close to ours, now facing a situation similar to the one we faced some weeks ago, are also looking at these data:

Moreover, people tend to come back to check the situation:

30 Comments

Vikas Malik

3/17/2020 06:38:31 pm

This is an awesome piece of automation. Excellent...

Emanuela Zaccone

3/19/2020 06:24:10 am

Thank you, we really hope this can help people understand the real impact of COVID-19.

Franco Mossotto

3/19/2020 06:31:45 am

I'll contact you on LinkedIn so we can discuss whether we can cooperate.

3/23/2020 04:19:52 pm

Well done! I like both the idea of fighting fake news with reliable data and your useful implementation for tracking and understanding the coronavirus!

Franco Mossotto

3/24/2020 08:48:30 am

For Italy, based on the Civil Protection data, there isn't much more to do, and, as described in the article, it is also automated.

Giovanni Sce

3/24/2020 02:38:34 pm

Thanks for the reply, I agree with everything. I live in the States and there is no reliable data.

Giovanni Miotto

3/23/2020 07:10:07 pm

Sorry to butt in, but since I'm really enjoying the presentation of the COVID-19 data, I wanted to report a bug that affects some statistics for Trentino-Alto Adige. The percentage figures (cases per 100k and fatality %) are wrong because they report the sum of the two autonomous provinces.

Franco Mossotto

3/24/2020 08:37:22 am

Thanks, it was also reported to me on Twitter; it should be fixed now.

andrea

3/24/2020 07:00:40 am

Excuse me, I'm a veterinary epidemiologist. Can you tell us how you calculate the growth factor?

andrea

3/24/2020 07:03:38 am

Is it simply new cases today / new cases yesterday?

Franco Mossotto

3/24/2020 08:41:45 am

I changed the names because they seemed to be causing confusion.

Nicola

3/28/2020 02:41:29 pm

I need a clarification: what does the "Daily Cases" measure refer to? If I look at today's figure (3/28), it shows 5974 cases nationwide, but if I download the CSV from GitHub, under "nuovi attualmente positivi" (new currently positive) I see 3651, and I don't understand how to reconcile the two values. Incidentally, the new currently positive count seems to me a very good indicator of how the contagion is evolving.

Franco Mossotto

3/31/2020 04:25:57 am

"Daily Cases" are the people who tested positive that day, i.e. the new cases, which therefore add to the "Total Cases".

Franco Mossotto

4/10/2020 06:40:59 am

Interesting dashboard and data.

Marco

4/12/2020 02:18:37 am

Good morning, how are "Fatality on all cases" and "Positive test" calculated?

Franco Mossotto

4/14/2020 03:05:37 am

Good morning,

Fabrizio

4/13/2020 02:58:54 pm

Hello, I have been following your dashboard since the beginning of the epidemic because it helps me understand very well how the disease is evolving in the country and in my region. However, I noticed that the number of new positives added each day depends heavily on the number of tests performed, and this causes fluctuations in the daily positives chart that hurt its readability. I thought that by calculating the daily percentage of positive tests and applying it to a "standard" number of swabs per day, every day, one could get a smoother trend without those fluctuations. Of course this would not be based on real data, but it could help show the real trend of the virus.
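The normalization suggested above can be sketched in a few lines of Python. This is an illustration under assumptions: both inputs are daily (not cumulative) counts of new positives and of tests, and the “standard” number of swabs, here a hypothetical 10,000, is an arbitrary choice, so the output is a smoothed indicator rather than a real case count.

```python
from typing import List, Optional

# Hypothetical "standard" number of swabs per day; any fixed value works.
STANDARD_TESTS = 10_000


def normalized_positives(
    new_positives: List[int],
    tests_done: List[int],
    standard_tests: int = STANDARD_TESTS,
) -> List[Optional[float]]:
    """Apply each day's positive-test rate to a fixed number of tests.

    Both inputs are assumed to be daily counts; days with no tests give None.
    """
    if len(new_positives) != len(tests_done):
        raise ValueError("both series must cover the same days")
    result: List[Optional[float]] = []
    for positives, tests in zip(new_positives, tests_done):
        if tests <= 0:
            result.append(None)
        else:
            # positives / tests is the day's positive rate; scaling it to
            # standard_tests removes the effect of the testing volume.
            result.append(positives * standard_tests / tests)
    return result
```

For example, 100 positives out of 1,000 tests and 300 out of 2,000 become 1,000 and 1,500 "standardized" positives, so the second day still reads higher even though twice as many tests were run.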

Franco Mossotto

4/14/2020 03:13:28 am

You're absolutely right, and it has been discussed a lot, for example in Il Post.

Fabrizio

4/14/2020 07:21:29 am

All right, thank you very much for your help.

eugenio

4/20/2020 10:53:09 am

Thank you for this remarkable table, which has been my main reference for the past month.

Franco Mossotto

4/20/2020 11:28:12 am

I'm using 2 Growth Factors:

eugenio

4/21/2020 05:06:10 am

Sorry for the late reply.

Franco Mossotto

4/21/2020 06:47:13 am

Yes, indeed: the total case counts provided by the Civil Protection for Piedmont on March 9 and February 27 were lower than those of the previous day, hence a negative Daily Cases value, and hence a negative growth factor.
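The effect described in this reply can be reproduced with a minimal Python sketch. It assumes, hypothetically, that Daily Cases is the day-over-day difference of Total Cases and that the growth factor is today's Daily Cases divided by yesterday's, the simple definition asked about earlier in this thread; the dashboard's actual two growth factors are not spelled out here.

```python
from typing import List, Optional


def daily_cases(total_cases: List[int]) -> List[int]:
    """Day-over-day change of the cumulative total (one fewer element)."""
    return [today - yesterday for yesterday, today in zip(total_cases, total_cases[1:])]


def growth_factors(daily: List[int]) -> List[Optional[float]]:
    """Today's new cases divided by yesterday's; None if yesterday was 0."""
    return [
        None if yesterday == 0 else today / yesterday
        for yesterday, today in zip(daily, daily[1:])
    ]
```

For example, `daily_cases([100, 150, 140, 200])` gives `[50, -10, 60]`: the downward revision of the total from 150 to 140 shows up as -10 new cases, and `growth_factors([50, -10, 60])` gives `[-0.2, -6.0]`, both negative because of that one revision.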

eugenio

4/21/2020 07:52:34 am

Ok, thanks.

4/24/2020 01:34:34 pm

Today's data (April 24, 2020) for people tested and total swabs are wrong, probably swapped.

Franco Mossotto

4/27/2020 02:48:24 am

Yes, the Civil Protection had initially swapped them, and then fixed them.

Giuseppe Cassano

4/30/2020 06:25:04 am

1) Wonderful!

Franco Mossotto

4/30/2020 09:50:43 am

The Closed Cases chart shows % Death and % Recovered; they are cumulative, not based on the daily figures.
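A sketch of how such cumulative percentages could be computed, assuming (this is our assumption, not stated in the reply) that closed cases are the cumulative deaths plus the cumulative recovered:

```python
from typing import Tuple


def closed_case_percentages(cum_deaths: int, cum_recovered: int) -> Tuple[float, float]:
    """Return (% Death, % Recovered) over cumulative closed cases.

    Closed cases are assumed to be deaths + recovered, both cumulative,
    so the two percentages always add up to 100.
    """
    closed = cum_deaths + cum_recovered
    if closed == 0:
        raise ValueError("no closed cases yet")
    return 100 * cum_deaths / closed, 100 * cum_recovered / closed
```

Using cumulative rather than daily counts keeps the ratio stable on days when few cases are closed.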
