|

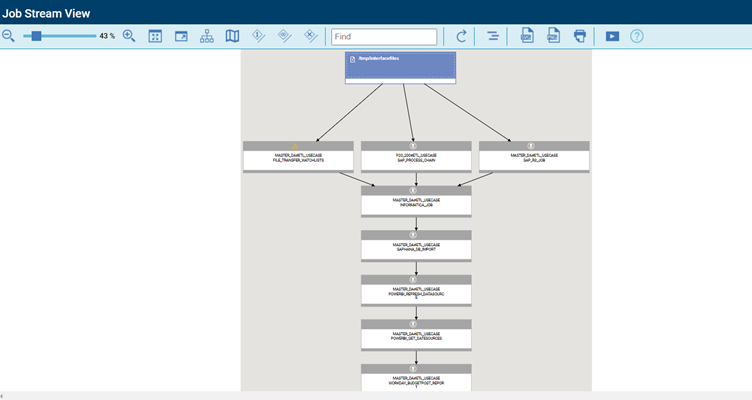

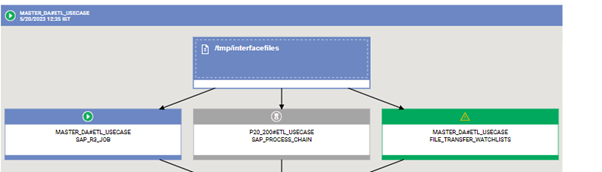

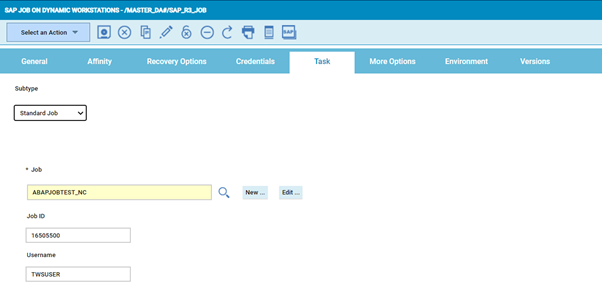

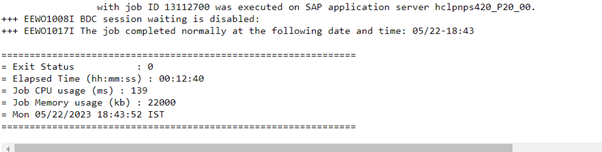

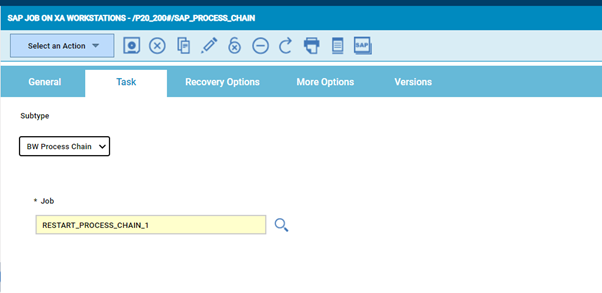

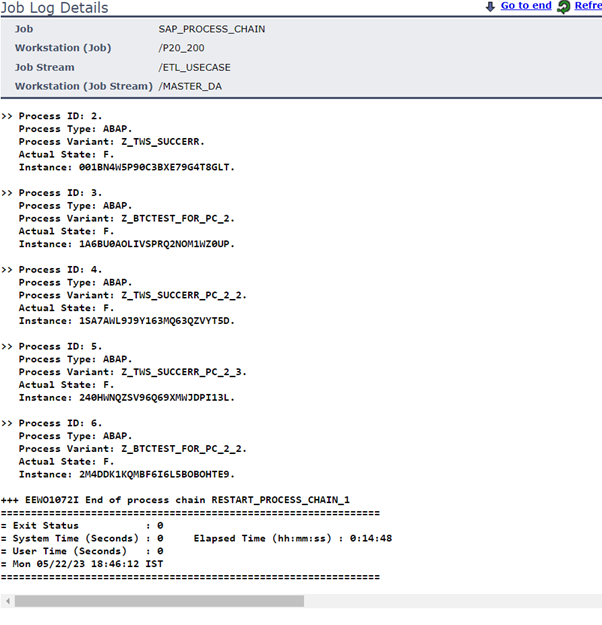

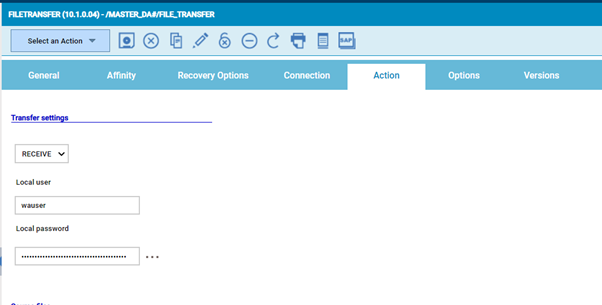

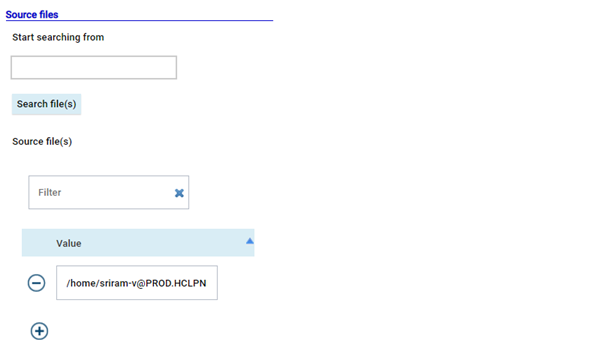

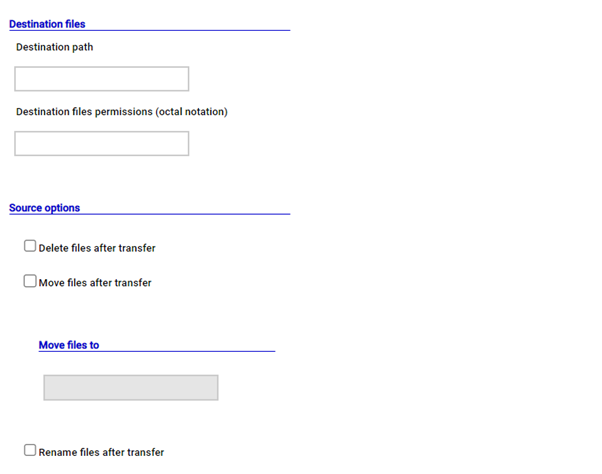

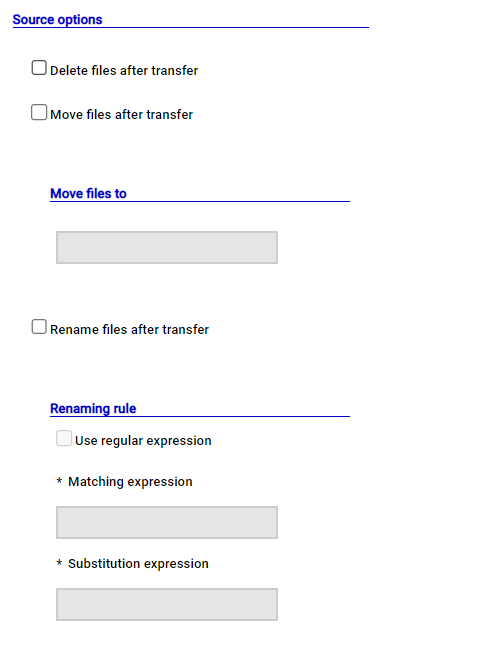

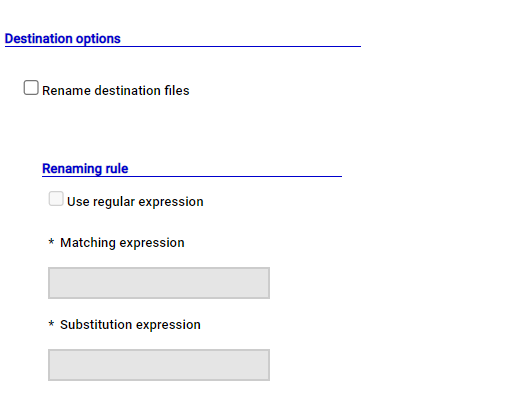

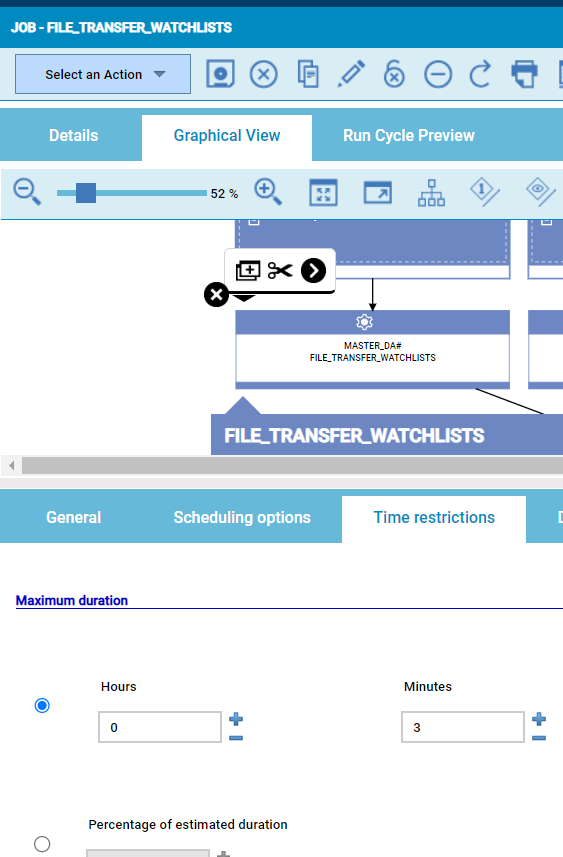

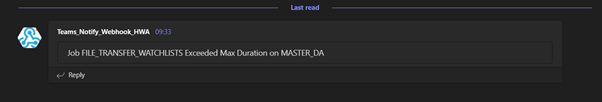

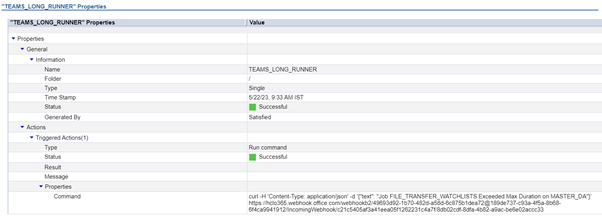

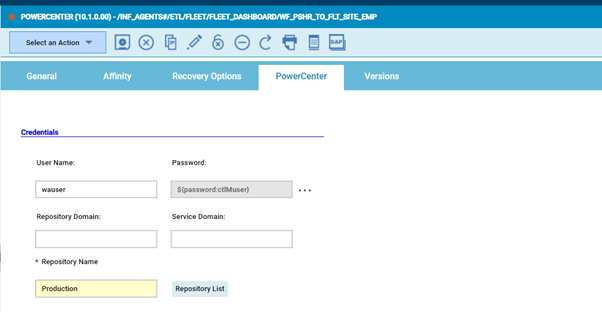

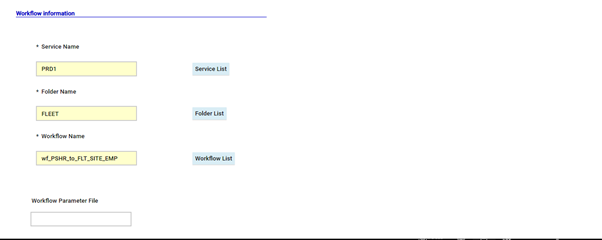

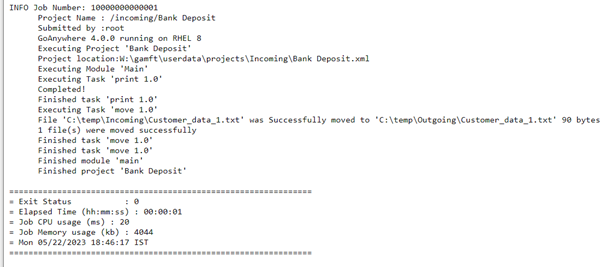

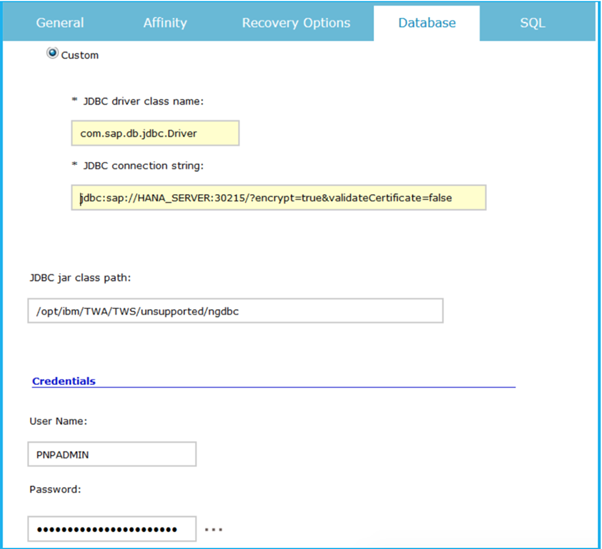

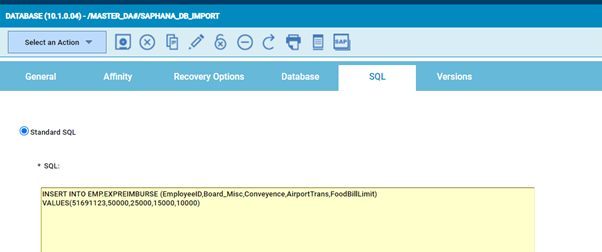

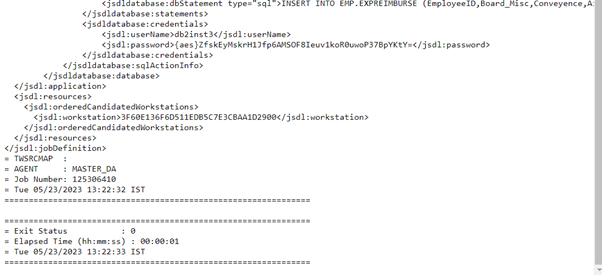

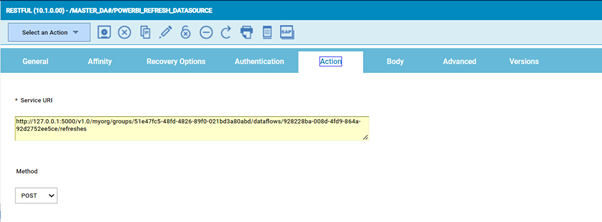

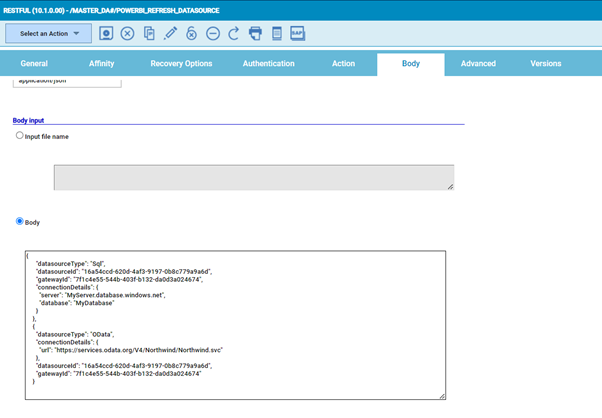

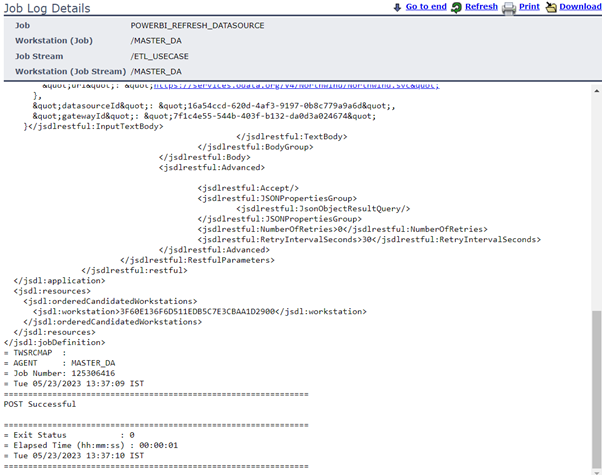

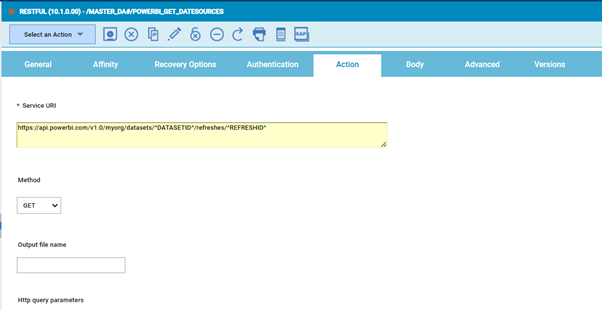

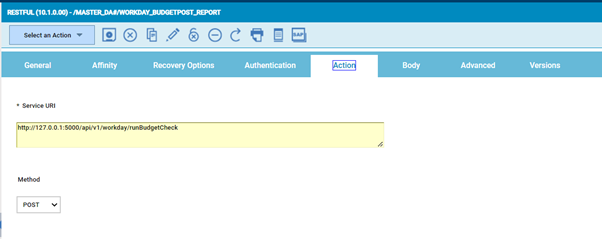

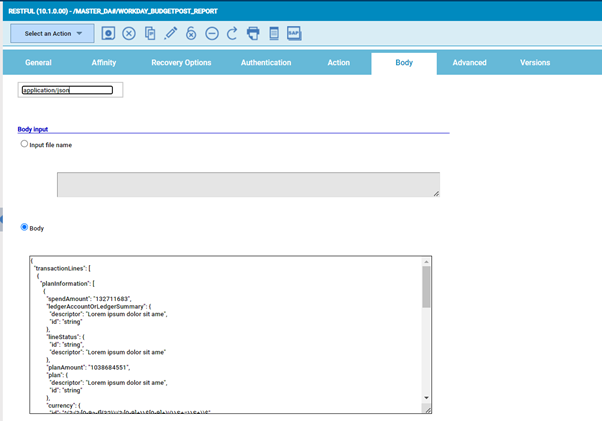

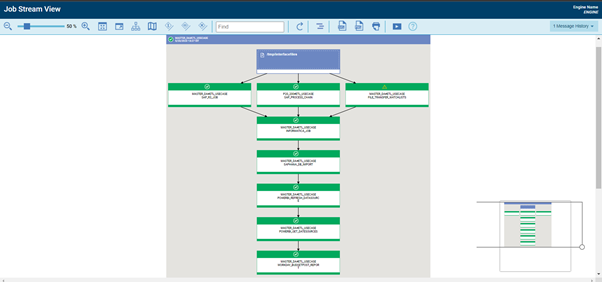

In this blog, we walk through a complete end-to-end data pipeline orchestration use case on HCL Workload Automation (HWA). Data arrives from multiple source systems, and the source files are detected and processed in different systems. After processing, the data is transformed on a data staging/transformation platform, and the transformed data is ingested into a data warehouse. Once the data is in the warehouse, analytics can be run on it through any of the popular analytics platforms, followed by a query on the status of the analytics step to get its response, and finally reports are run from a reporting tool.

Customer Requirement: A customer needs to orchestrate their data pipeline end to end and has multiple interface files arriving from different source systems. The interface files must be detected as they arrive and processed in real time in different systems. The customer uses SAP as their main ERP and has an SAP R/3 system that processes an incoming interface file with an ABAP program, passing a variant and a step user. An SAP Process Chain must also run as part of the flow to process an incoming file. There is also a file transfer step, which fetches one of the source files and imports it into another target.

Once data processing is done, the generated output must be transformed, and the customer uses Informatica as the data transformation tool. The transformed data must then be ingested into a data warehouse, for which the customer uses SAP Data Warehouse on an SAP HANA DB. Analytics must then be run on the ingested data. The customer uses Power BI as the main analytics product, and the pipeline refreshes a Power BI data source. The status of that refresh must then be fetched to confirm that the refresh step finished successfully.
After the status check, reports must be run in the Workday application and then mailed to the users. A failure on any step must trigger an alert in MS Teams, and an alert must also be sent to MS Teams if the file transfer step takes longer than 3 minutes.

Solution: HCL Workload Automation acts as the main orchestrator for this pipeline end to end, running jobs across multiple applications. It detects source files from the different source systems in real time, uses the plugins available in the product to connect to each application and run the individual steps, tracks those steps in real time, alerts on abends/failures in MS Teams, and tracks long runners and alerts on them in MS Teams as well. If needed, recovery can also be automated through conditional branching, recovery jobs, or event rules (automated recovery is outside the scope of this blog).

Detecting Source Files: Source files can be detected through event rules that use the “File Created” event with an action to submit the job stream in real time, or through file dependencies.

SAP R/3 Batch Job: The SAP R/3 batch job runs an ABAP program with a variant and a step user. You can also pass printer parameters such as New Spool Request, Spool Recipients, and Printer Formats, along with the typical archiving parameters you would find in SM36. Multi-step SAP jobs are possible as well.
HWA fetches the SAP job log from SAP in real time into the local job log within HWA.

SAP Process Chain Job: An SAP Process Chain job runs SAP BW process chains, with the option of passing the execution user that triggers the BW process chain.

File Transfer Job: A File Transfer job can trigger file transfers to and from external third parties, or internally between two servers. You can also use the A2A (Agent to Agent) protocol for HTTPS transfers from source to destination between agents, and there are options for regular expression mappings and for archival at source or at destination with date and time stamps. In this case, the file transfer step runs long and exceeds the Max Duration of 3 minutes set for this job, which results in a notification/alert being sent to MS Teams via an event rule.

Informatica Job: The data transformation is done by an Informatica step that runs an Informatica PowerCenter workflow from an Informatica folder. The job definition also specifies the service domain, repository domain, repository name, and so on. The job log shows the transformation within Informatica.

SAP HANA Job: An SAP HANA DB job is used to query the database or run a stored procedure against an SAP HANA DB, with the SAP HANA ngdbc driver specified in the job definition. Here, the job performs the import into the SAP HANA DB. After the import, analytics can be run on the data ingested into the database.

PowerBI Refresh DataSource: The next step after the import into the HANA DB is to refresh a Power BI data source, which can be accomplished through a RESTful job within HWA.
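To make the refresh step more concrete, here is a minimal Python sketch of the two HTTP requests involved: the POST that starts a dataset refresh and the GET that later reads its status. It only builds the requests and does not send them. The endpoint paths follow the public Power BI REST API as far as we know it; the dataset ID, refresh ID, access token, and the `notifyOption` value are placeholder assumptions, not values taken from this pipeline, and the function names are hypothetical.

```python
import json
import urllib.request

# Base URL of the public Power BI REST API.
POWER_BI_API = "https://api.powerbi.com/v1.0/myorg"


def build_refresh_request(dataset_id: str, token: str) -> urllib.request.Request:
    """Build (but do not send) the POST that triggers a dataset refresh."""
    url = f"{POWER_BI_API}/datasets/{dataset_id}/refreshes"
    # Assumed body: suppress e-mail notifications for this refresh.
    body = json.dumps({"notifyOption": "NoNotification"}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


def build_status_request(
    dataset_id: str, refresh_id: str, token: str
) -> urllib.request.Request:
    """Build (but do not send) the GET that reads the status of one refresh."""
    url = f"{POWER_BI_API}/datasets/{dataset_id}/refreshes/{refresh_id}"
    return urllib.request.Request(
        url,
        method="GET",
        headers={"Authorization": f"Bearer {token}"},
    )


def refresh_succeeded(status_payload: dict) -> bool:
    """Treat a refresh as successful only when its status is 'Completed'."""
    return status_payload.get("status") == "Completed"


if __name__ == "__main__":
    # Placeholder identifiers, for illustration only.
    post = build_refresh_request("my-dataset-id", "my-token")
    get = build_status_request("my-dataset-id", "my-refresh-id", "my-token")
    print(post.get_method(), post.full_url)
    print(get.get_method(), get.full_url)
```

In an HWA RESTful job, the same URL, headers, and JSON body would be supplied in the job definition rather than built in code, and the dataset and refresh IDs would come from variables.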
A RESTful job calls a service URL over HTTPS (in this case with a POST), passing a JSON body either from a file or directly in the Body field of the job.

Get Status of PowerBI Refresh: The status of the previous step is retrieved through another RESTful job, which issues an HTTPS GET on the refresh status, passing DATASETID and REFRESHID as variables from the previous step.

Workday Reports: Reports are run in the Workday application, again through a RESTful POST job that passes the JSON body directly in the Body field of the job.

Overall Status of the Data Pipeline: Using the graphical views of the product, it is easy to track the entire process in real time and to be alerted to any failures or long runners directly over MS Teams, a ticketing tool, email, or SMS.

Advantages of running Data Pipeline Orchestration over HWA:

Authors Bio

|

Archives

July 2024


|
