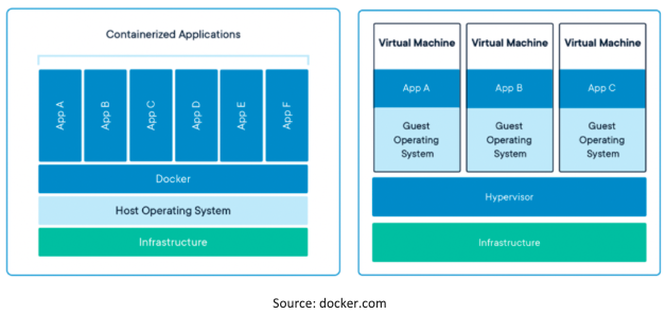

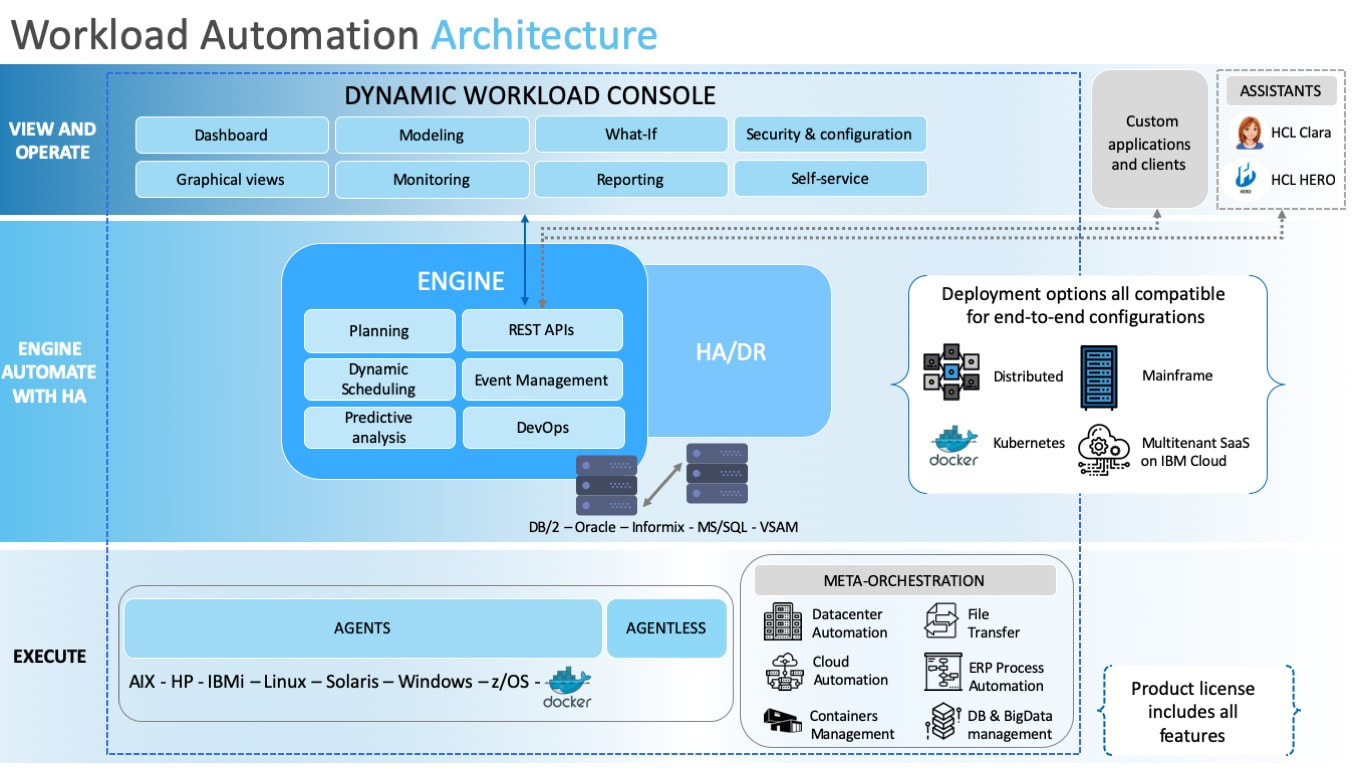

All the questions you never dared ask about containers, Docker and Kubernetes

You have heard a lot about containers in recent years, but you still don't know how to leverage them with Workload Automation? Then this is the right place to start. We collected the questions our users ask most often when we talk about containers.

1. What we talk about when we talk about containers

What are these “containers” anyway? According to docker.com, “Containers are an abstraction at the app layer that packages code and dependencies together. Multiple containers can run on the same machine and share the OS kernel with other containers, each running as isolated processes in user space. Containers take up less space than VMs (container images are typically tens of MBs in size), can handle more applications and require fewer VMs and Operating systems.”

So, are containers and virtual machines the same thing? Nope. Again, as stated on docker.com, “Virtual machines (VMs) are an abstraction of physical hardware turning one server into many servers. The hypervisor allows multiple VMs to run on a single machine. Each VM includes a full copy of an operating system, the application, necessary binaries and libraries - taking up tens of GBs. VMs can also be slow to boot.” Take a look at the difference between containerized applications and virtual machines:

What’s the difference between containers and Docker? With Docker technology, users can develop, deploy and run containerized applications. Thanks to Docker containers, we can package an application and all of its dependencies and have it ready to run anywhere. You do not need to worry about the libraries and dependencies installed on the host, as long as it provides a compatible kernel (Linux containers, for example, need a Linux kernel).

And what about Kubernetes? Welcome to the automation domain. As per its own definition, “Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services, that facilitates both declarative configuration and automation.
It has a large, rapidly growing ecosystem. Kubernetes services, support, and tools are widely available.” Originally launched by Google, the project was open-sourced in 2014.

While Docker and Docker Compose are great for running containers in a development environment, large, highly available, multi-tenant production environments deployed on clusters call for Kubernetes. Just as an operating system kernel allocates processes and threads to the available CPUs, Kubernetes distributes containers across servers called nodes, possibly starting multiple instances to scale the application out. It also monitors the availability and health of containers and restarts them when a container or node fails; it makes containers reachable through an internal DNS regardless of where they actually run; it routes and load-balances external connections to the containers; it attaches persistent volumes as containers need them; and much more.

Kubernetes is available as the core open-source project, through commercial offerings such as Red Hat OpenShift, and on public clouds such as IBM Cloud, Google Kubernetes Engine (GKE), Amazon EKS and Azure Kubernetes Service (AKS).

2. Why Workload Automation is relevant

Wondering why you should care about workload automation? Workload Automation is the platform to schedule, initiate, run and manage digital business processes end to end, automatically, so that business processing can take place without human intervention. Plus, the solution is available on mainframe, virtualized (on-premises), cloud and hybrid environments. In short, Workload Automation is a complete solution for batch, real-time IT and application workload management. It increases productivity with powerful plan-based and event-driven scheduling, and it runs and monitors workloads at any location.
The platform provides a single point of control for application developers, IT administrators and operators, giving them both autonomy and precise governance through centralized access control and auditing. So, wherever there is a process to automate, there is a need for workload automation to manage it.

Containers are definitely Workload Automation’s best friends. Take a look at how the Workload Automation architecture is organized and how Docker fits into it. Workload Automation is made of three layers, and every component can run in containers, on physical or virtual machines, or on cloud platforms, including hybrid cloud environments.

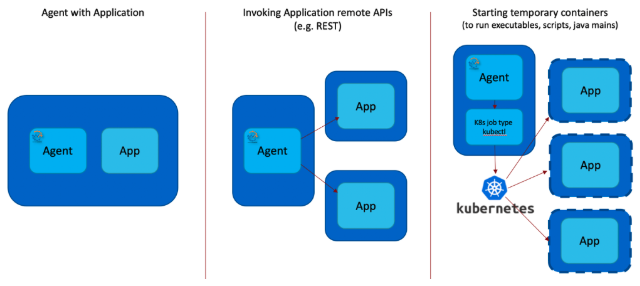

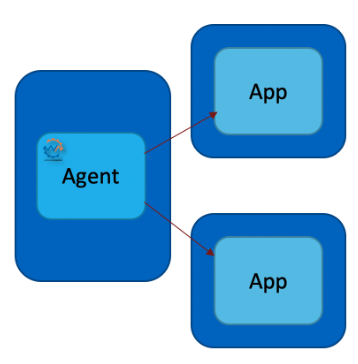
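The idea of running a business process end to end without human intervention can be pictured with a toy model: a job stream in which each job starts only after its predecessors have completed. The sketch below illustrates that dependency ordering in Python, assuming a made-up stream; the job names and the `run_job` and `run_stream` helpers are hypothetical, and this is not the Workload Automation API or its scheduling language.

```python
from graphlib import TopologicalSorter

# Hypothetical job stream: each job maps to the set of jobs it depends on.
JOB_STREAM = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "load_warehouse": {"transform"},
    "send_report": {"load_warehouse"},
}


def run_job(name: str) -> bool:
    """Stub standing in for real work (e.g. launching a container); always succeeds."""
    print(f"running {name}")
    return True


def run_stream(stream: dict[str, set[str]]) -> list[str]:
    """Run jobs in dependency order and return the order in which they completed."""
    sorter = TopologicalSorter(stream)
    sorter.prepare()
    completed: list[str] = []
    while sorter.is_active():
        # Every job whose predecessors have all completed is ready now;
        # sorting only makes the order deterministic for this demo.
        for job in sorted(sorter.get_ready()):
            if not run_job(job):
                # Raising here means the failed job's successors never start.
                raise RuntimeError(f"job {job} failed; successors not started")
            completed.append(job)
            sorter.done(job)
    return completed


if __name__ == "__main__":
    print(run_stream(JOB_STREAM))
```

`graphlib` is part of the standard library from Python 3.9. Because `get_ready()` returns every job whose predecessors have finished, the two extract jobs become ready together; a real scheduler could run them in parallel, while this sketch simply runs them one after the other.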

Can I then schedule containers with a Workload Automation agent running in a container? Definitely yes. And you can even choose among three methods.

Agent with application

In this case, both the agent and the application run in the same container. Actually, this is not the best option out there, since the cons clearly outweigh the pros and come with more constraints:

Pro

Cons

In the second method, the agent and the applications each run in separate containers, and the applications are invoked through remote APIs (e.g. REST, JDBC). With this solution, the only drawbacks are that the applications must expose APIs and their containers must always be active. Let’s recap:

Pro

Cons

- The applications must expose APIs.
- The application containers must always be active.
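In the second method the agent only needs to reach the application over the network. The sketch below illustrates the idea in Python, assuming a hypothetical REST endpoint `POST /run` that accepts a job name and returns a JSON status. A local `http.server` thread stands in for the application container so the example runs on its own; the endpoint, payload fields and helper names are illustrative assumptions, not the Workload Automation agent's actual plugin or API.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class AppHandler(BaseHTTPRequestHandler):
    """Stands in for the application container: exposes a hypothetical POST /run endpoint."""

    def do_POST(self) -> None:
        if self.path != "/run":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        request = json.loads(self.rfile.read(length) or b"{}")
        body = json.dumps({"job": request.get("job"), "status": "done"}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        pass  # keep the demo output quiet


def invoke_remote_job(base_url: str, job: str, timeout: float = 5.0) -> dict:
    """Agent side: call the application's API and return its JSON reply."""
    req = urllib.request.Request(
        f"{base_url}/run",
        data=json.dumps({"job": job}).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # An empty ProxyHandler ignores any HTTP proxy configured in the environment.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(req, timeout=timeout) as resp:
        return json.loads(resp.read())


if __name__ == "__main__":
    # Port 0 lets the OS pick a free port; in a real deployment the URL would be
    # the application container's address (hypothetical here).
    server = ThreadingHTTPServer(("127.0.0.1", 0), AppHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    url = f"http://127.0.0.1:{server.server_address[1]}"
    print(invoke_remote_job(url, "nightly_billing"))
    server.shutdown()
    server.server_close()
```

The "containers always active" drawback shows up here too: if nothing is listening at the application's address, the call fails (typically with `urllib.error.URLError`) instead of starting the work.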

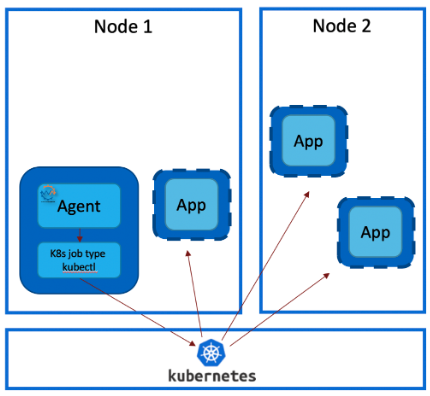

In the third method, containers are at their best and the power of Kubernetes is unleashed to make the most of your K8s clusters. Kubernetes manages scaling, automatic failover and more, so there is no need to worry about them.

Pro

Cons

Sounds great, right? We want to hear your stories! What did you like most about containers? How did they improve or change the way you manage your workloads? And if you still have doubts, just leave us a comment below.

This article is deployed in a container and will self-destruct after you read it. Share it before it’s too late!

`docker run --rm my-article`

