
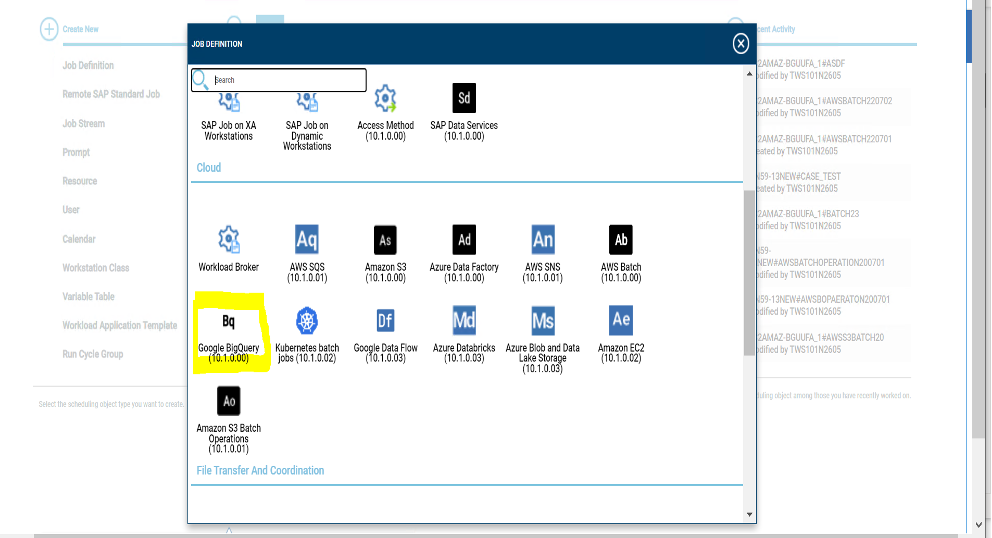

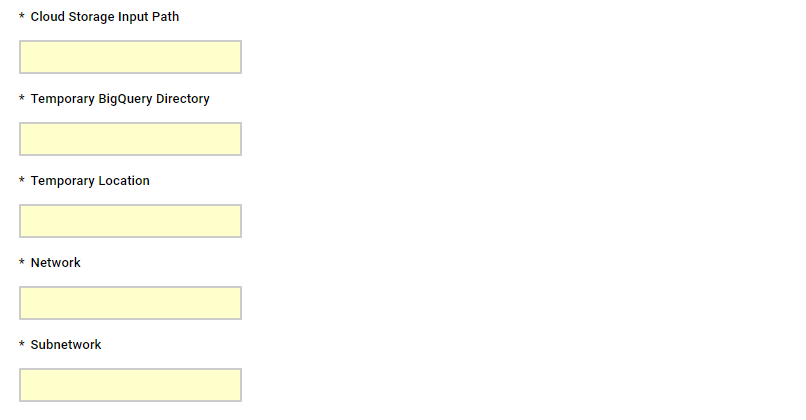

Let us begin by understanding what Google Cloud Dataflow is all about, before moving on to our GCP Cloud Dataflow plugin and how it benefits our workload automation users.

Data is generated in real time from websites, mobile apps, IoT devices and other workloads. Capturing, processing, and analyzing this data is a priority for all businesses. However, data from these systems is often not in a format that is conducive to analysis or to effective use by downstream systems. That’s where Dataflow comes in! Dataflow is used for processing and enriching batch or stream data for use cases such as analysis, machine learning or data warehousing.

Dataflow offers a collection of pre-built templates, with the option to create your own custom ones! Here in workload automation, you can implement the Text Files on Cloud Storage to BigQuery template. The Text Files on Cloud Storage to BigQuery pipeline is a batch pipeline that allows you to read text files stored in Cloud Storage, transform them using a JavaScript User Defined Function (UDF) that you provide, and append the results to BigQuery.

Let us now look at the plugin and its job definition parameters.

Log in to the Dynamic Workload Console and open the Workload Designer. Choose to create a new job and select the “GCP Cloud Dataflow” job type in the Cloud section.

Figure 1: Job Definition Page

Connection

Establishing connection to the Google Cloud server: Use this section to connect to Google Cloud in one of two ways.

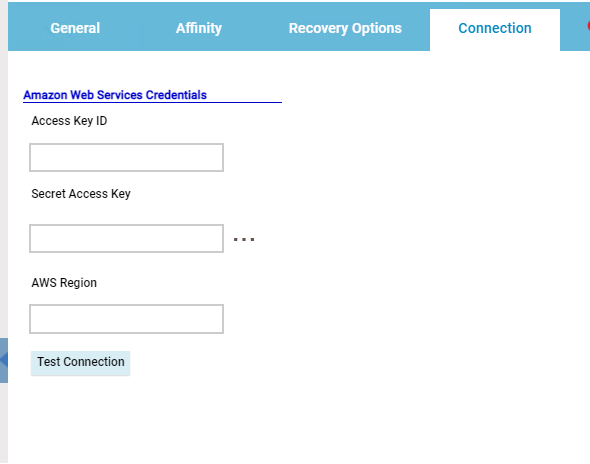

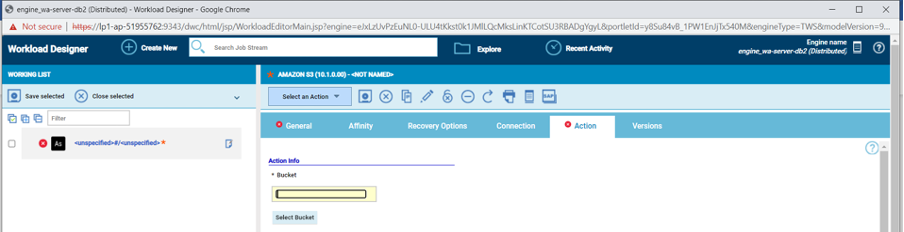

Service Account - The service account associated with your GCS account. Click the Select button to choose the service account in the cloud console.

Project ID - The project ID is a unique name associated with each project. It is mandatory and unique for each service account.

Test Connection - Click to verify that the connection to Google Cloud works correctly.

Figure 2: Connection Page

Action

In the Action tab, specify the bucket name and the operation that you want to perform.

Batch pipeline. Reads text files stored in Cloud Storage, transforms them using a JavaScript user-defined function (UDF), and outputs the result to BigQuery.
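To make the UDF step more concrete, here is a minimal sketch of the kind of JavaScript function such a template could call for each line of input text. It assumes a comma-separated input file with three columns; the function name `transform` and the field names `name`, `city` and `age` are hypothetical and would need to match your own data and BigQuery table schema. The function receives one line as a string and returns a JSON string describing one row.

```javascript
// Illustrative UDF sketch, not taken from the plugin itself.
// Assumption: each input line looks like "Ada, London, 36".
// The column names below are hypothetical placeholders.
function transform(line) {
  // Split the raw text line into its comma-separated values.
  var values = line.split(',');

  // Build one row object; keys should match the BigQuery column names.
  var row = {
    name: values[0].trim(),
    city: values[1].trim(),
    age: parseInt(values[2], 10)
  };

  // Return the row as a JSON string.
  return JSON.stringify(row);
}
```

The sketch uses plain ES5 syntax (`var`, no arrow functions) so that it does not depend on newer JavaScript features. It does not guard against malformed lines; a real UDF would probably want to handle lines with missing columns.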

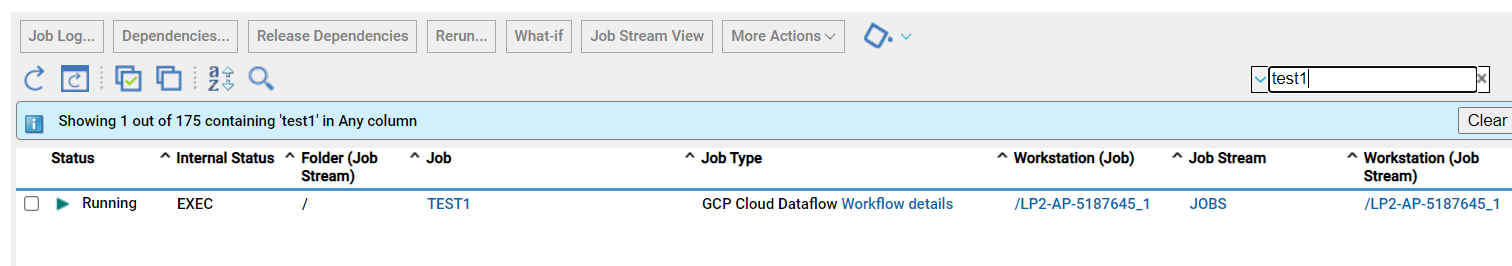

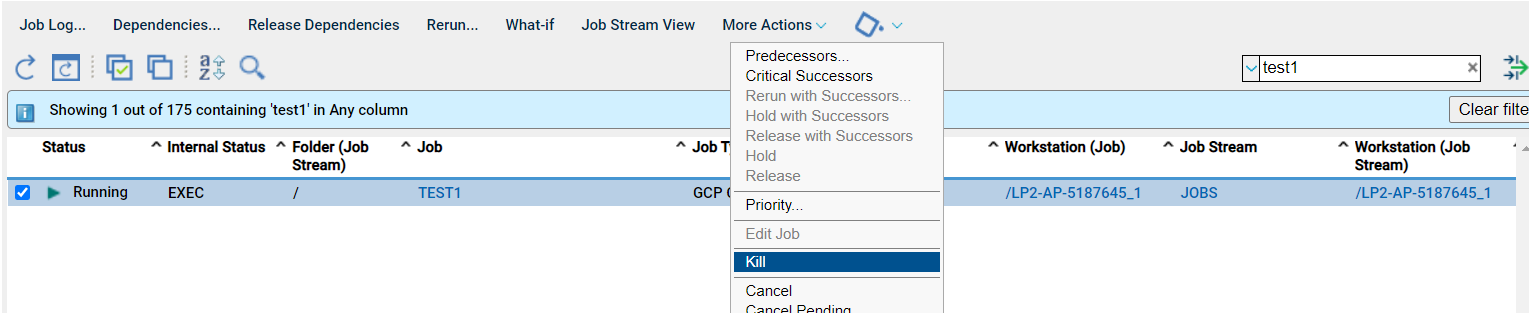

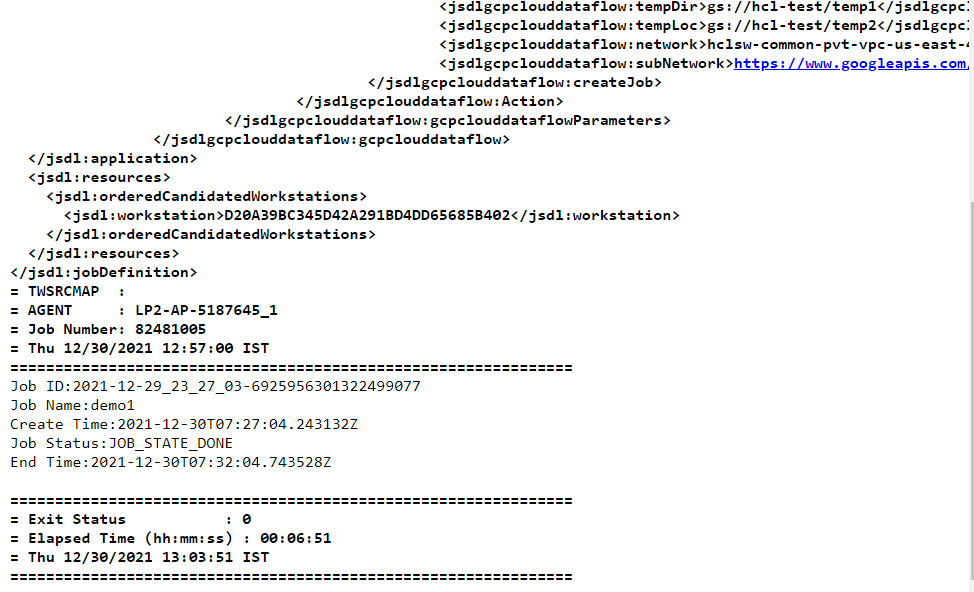

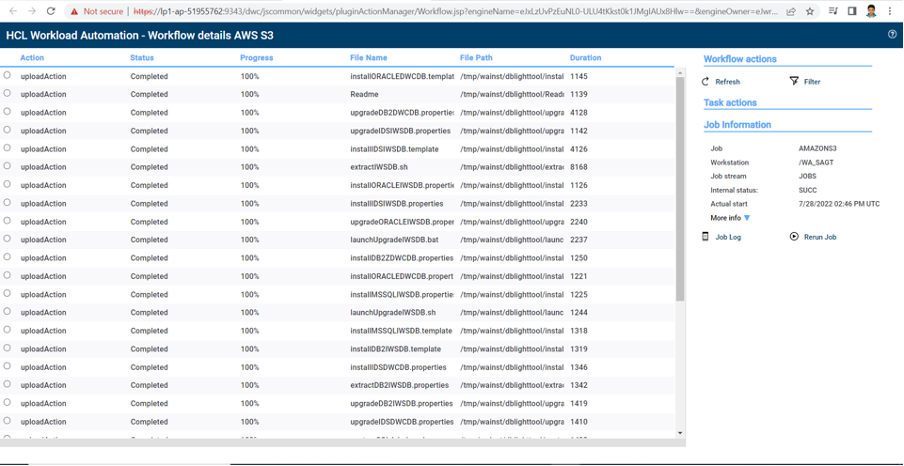

Figure 3: Action Page

Submitting your job

It is time to submit your job into the current plan. You can add your job to the job stream that automates your business process flow. Select the action menu in the top-left corner of the job definition panel and click Submit Job into Current Plan. A confirmation message is displayed, and you can switch to the Monitoring view to see what is going on.

Figure 4: Submit Job Into Current Plan Page

Monitor Page

Figure 5: Monitor Page

Users can cancel a running job by clicking the Kill option.

Job Log Details

Figure 6: Job Log Page

WorkFlow Page

Figure 7: Workflow Details Page

Are you curious to try out the GCP Cloud Dataflow plugin? Download the integrations from the Automation Hub and get started, or drop a line at [email protected].
