Quantitative evaluation of the scalability of the Workload Automation system with a web UI front-end

12/28/2022

This article shows an approach for the quantitative evaluation of the scalability of a system with a web UI front-end, which has been applied to the HCL Workload Automation product during the performance verification test phase. The article is divided into the following sections:

Introduction

A generic approach to evaluate the scalability of a system could consist of the following tasks:

This approach can be used to perform a quantitative assessment of the scalability of a system by building a simple analytical model using Little's law and the “Universal Scalability Law” (USL).

Many load generation tools are available to execute load tests, at different load levels, against a system with a web UI. In the example shown later, the tool used is HCL OneTest Performance.

While tasks 1) to 3) depend on the specific system under test, tasks 4) and 5) can be described in general terms. The proposed approach for tasks 4) and 5) is explained using the Dynamic Workload Console (DWC), a web-based user interface for HCL Workload Automation (WA), as an example of a system with a web UI.

Quantifying Scalability

When running load tests against a system, the three performance metrics that need to be collected and analyzed are:

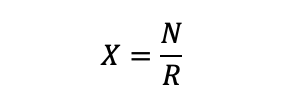

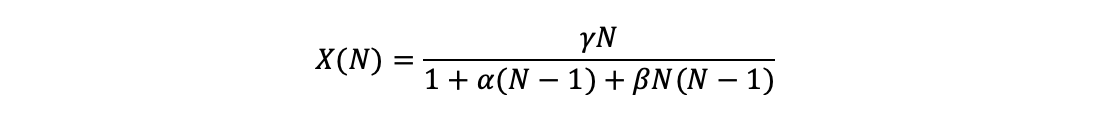

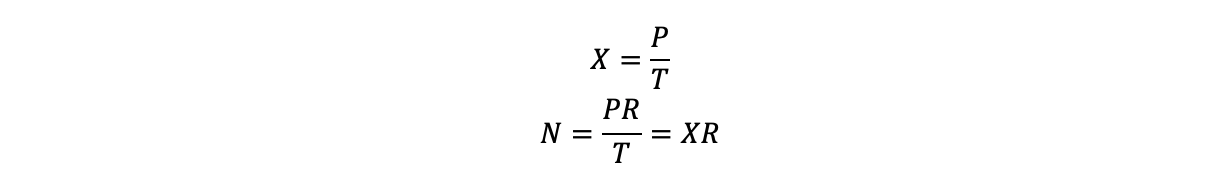

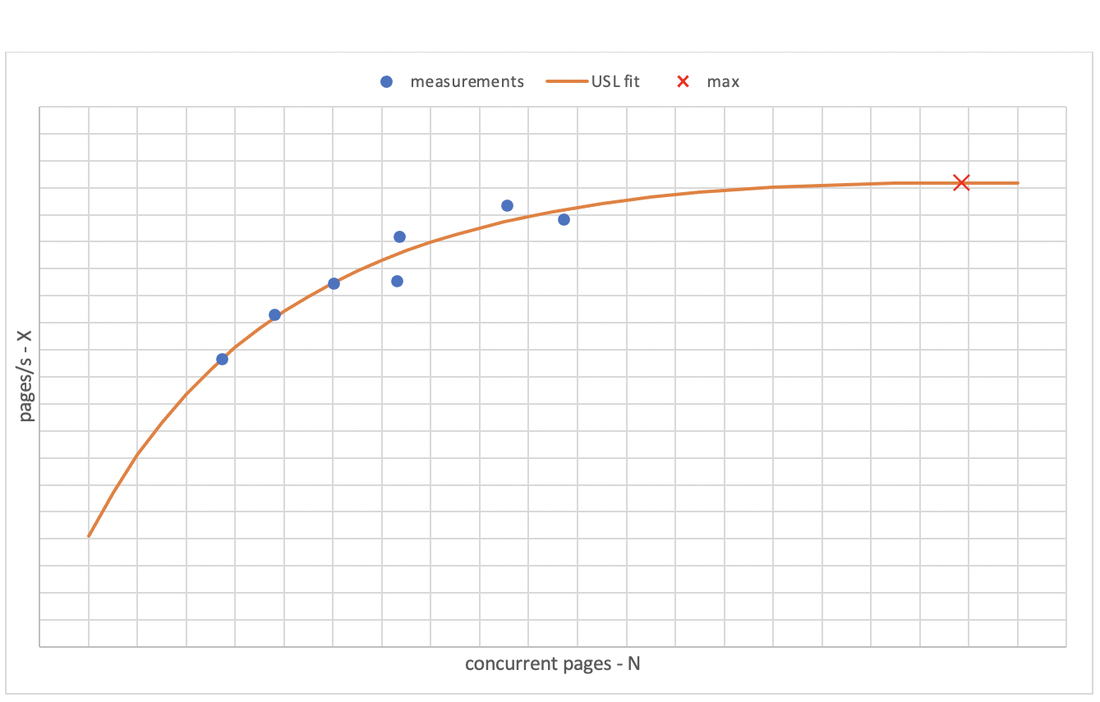

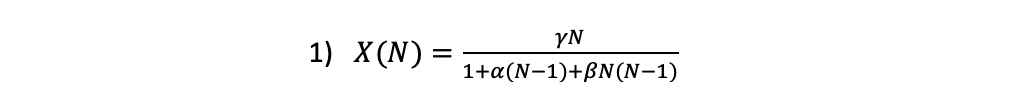

For each test at a given load level, the load must be applied to the system long enough for it to reach a stationary state, and to collect a statistically significant number of samples in this steady state. For a system in steady state, the long-term average values of the three metrics obey Little's law, with X the throughput, N the concurrency, and R the average response time:

$$N = X \cdot R$$

Little’s law can be used to calculate the value of one of the three metrics when the other two are known.

In many cases, the measured or calculated values of X and N at the different load levels (X1, N1, X2, N2, … Xn, Nn) will follow the “Universal Scalability Law” (USL):

$$X(N) = \frac{\gamma N}{1 + \alpha (N - 1) + \beta N (N - 1)}$$

Fitting the USL to the measured values of X and N allows for the calculation of the three coefficients:

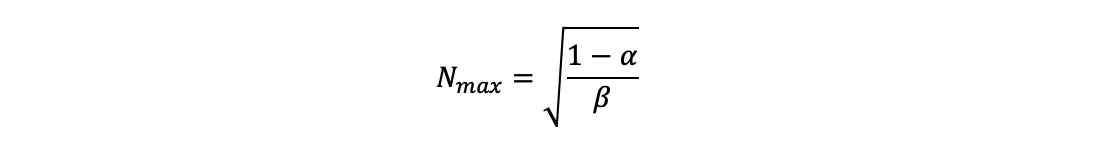

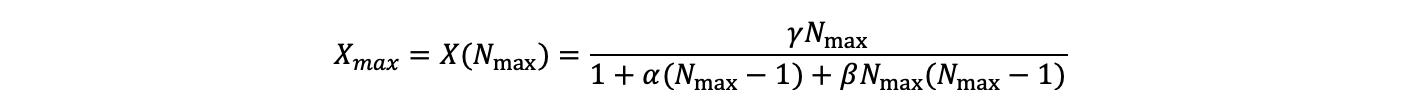
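The relationships used in this section can be sketched in a few lines of Python. This is a minimal illustration, assuming the commonly published three-parameter form of the USL, X(N) = γN / (1 + α(N − 1) + βN(N − 1)), and Little's law written as N = X · R. The function names and the sample coefficients are hypothetical and are not measurements of any product.

```python
import math


def concurrency_from_littles_law(throughput, response_time):
    """Little's law: N = X * R (average concurrency in steady state)."""
    return throughput * response_time


def usl_throughput(n, alpha, beta, gamma):
    """USL: throughput X at concurrency n for coefficients alpha, beta, gamma."""
    return gamma * n / (1.0 + alpha * (n - 1.0) + beta * n * (n - 1.0))


def usl_peak(alpha, beta, gamma):
    """Concurrency at which the USL curve peaks, and the throughput there.

    Setting dX/dN = 0 gives Nmax = sqrt((1 - alpha) / beta); requires beta > 0.
    """
    n_max = math.sqrt((1.0 - alpha) / beta)
    return n_max, usl_throughput(n_max, alpha, beta, gamma)


def usl_response_time(n, alpha, beta, gamma):
    """Average response time implied by Little's law: R = N / X(N)."""
    return n / usl_throughput(n, alpha, beta, gamma)


# Hypothetical coefficients, chosen only to illustrate the calculation.
ALPHA, BETA, GAMMA = 0.05, 0.0001, 100.0
N_MAX, X_MAX = usl_peak(ALPHA, BETA, GAMMA)
```

With β > 0 the modeled throughput rises up to `N_MAX` and declines beyond it, while the modeled response time keeps growing with concurrency.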

The values of α, β, γ quantify the scalability of the system under test. The theoretical maximum concurrency level (Nmax) can be determined with the formula:

$$N_{max} = \sqrt{\frac{1 - \alpha}{\beta}}$$

From this value, the theoretical maximum throughput (Xmax) can be calculated using the fitted USL:

$$X_{max} = X(N_{max})$$

Once a set of at least 6 (the more, the better) pairs of values (X, N) has been determined, one for each test run at a given load level, it is possible to fit the USL to them; for instance, using Excel’s Solver add-in as explained in the appendix “Using Excel’s Solver add-in to fit the USL to performance data”.

The Universal Scalability Law is a simple, black-box approach that models the system as a whole. It is based on simple relationships that hold true in most conditions. As such, it has limitations: for example, the USL carries no notion of heterogeneous workloads, like those occurring in a production environment, which involve different user types executing different business functions. Nevertheless, even in some such cases, the USL has been applied successfully, allowing the definition of a simple, quantitative model of a system’s scalability.

Generating variable load

HCL OneTest Performance is a workload generator tool, based on Rational Performance Tester, for automating load and scalability testing of web, ERP, and server-based software applications. It captures the network traffic exchanged when the application under test interacts with a server. This network traffic is then emulated by multiple virtual users while playing back the test. During playback, server response times, throughput, and other measurements are collected, and related reports are generated.

Load tests for a web application are built by recording a set of actions performed on the web UI itself, using a browser configured to have OneTest Performance capture all the data flowing between the browser and the application.
A recording creates multiple HTTP requests and responses, which are grouped by OneTest Performance into “HTTP pages”. Each HTTP page contains a list of the requests recorded for that page, named after their web addresses. An HTTP request contains the corresponding response data. Collectively, the requests and responses listed inside an HTTP page account for everything that was returned by the web server for that page.

By defining user groups, related tests can be grouped and run (i.e., played back) in parallel: tests belonging to different user groups run in parallel. By adding a test to a schedule, it is possible to emulate the action of an individual user. A schedule can be as simple as one virtual user or one iteration running one test, or as complicated as hundreds of virtual users or iteration rates in different groups, each running different tests at different times.

A schedule can be used to control tests, and the load level they generate against the system, in the following ways:

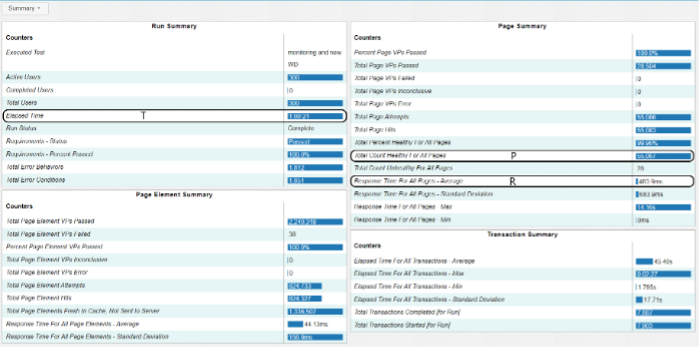

Example application

The Dynamic Workload Console (DWC) is a web-based user interface for HCL Workload Automation (WA). OneTest Performance can be used to record and play back tests for DWC. After the execution of a test schedule at a specified load level is completed, OneTest Performance generates a report with a summary page like the following:

(The numbers shown above are just an example and are not meant to document a specific DWC performance level.)

The performance metrics X and N can be calculated from the summary report using the values of:

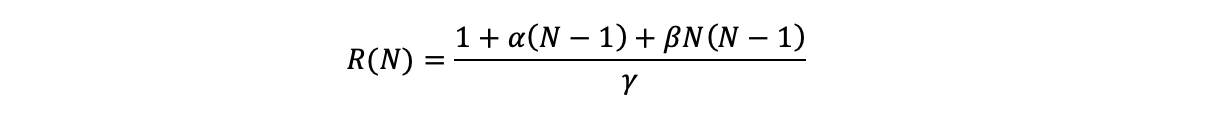

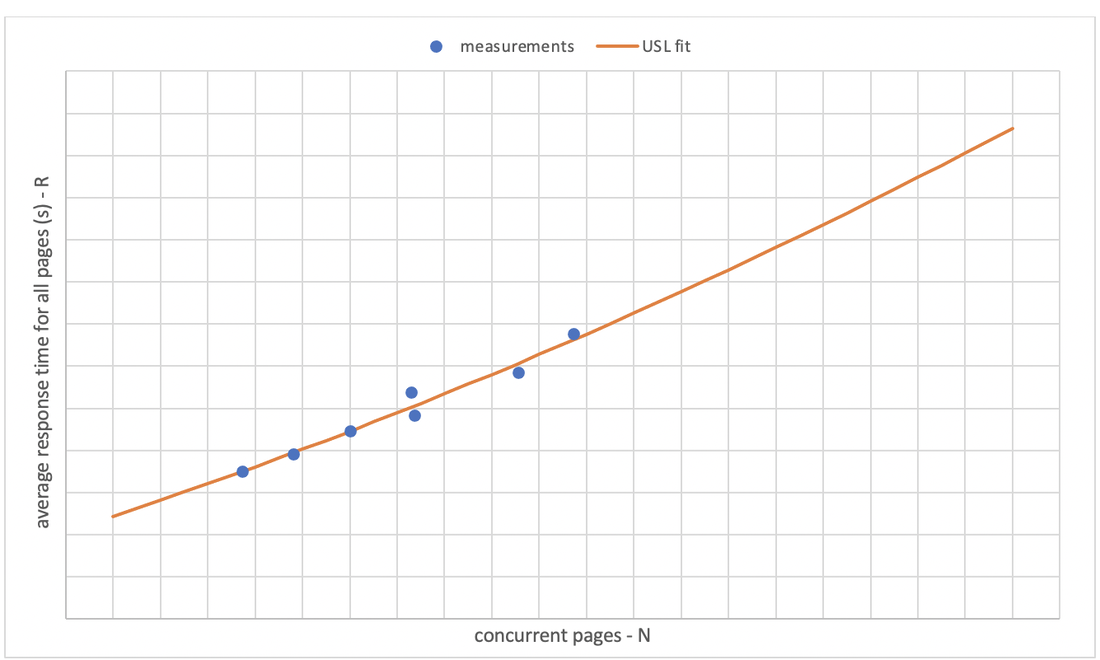

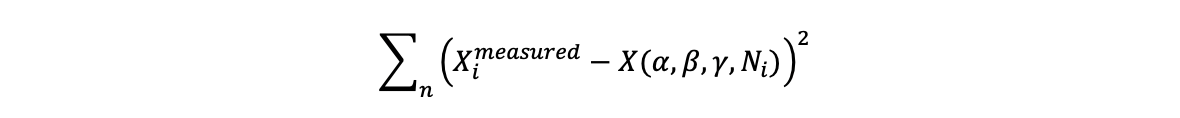

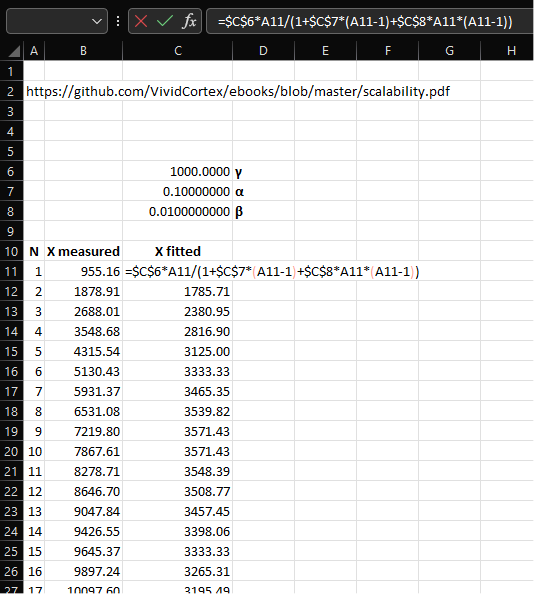

Given the values of T, P, and R, X and N can be calculated using:

Running the same test multiple times, each time configuring the schedule with a different number of users or with different rates, makes it possible to measure different values for (X, N). As an example, the following chart shows a set of 7 measurements (X, N), corresponding to 7 different runs of the same test schedule, and the corresponding USL fit:

Once the values of α, β, γ are available, it is also possible to model the relationship between average response time (R) and concurrency (N), by applying Little’s law and the USL:

$$R(N) = \frac{N}{X(N)} = \frac{1 + \alpha (N - 1) + \beta N (N - 1)}{\gamma}$$

The following chart shows an example for the same data as above:

Conclusion

This article shows how to build a simple quantitative scalability model of a system with a web UI front-end using a workload generator tool. As an example, HCL OneTest Performance can be employed to collect performance data for a system like DWC; these data can then be used to build such a model. Despite its simplicity and limitations, the USL provides a quantitative framework for:

Appendix: Using Excel’s Solver add-in to fit the USL to performance data

Using the Universal Scalability Law (USL) to quantify the scalability of a system requires the estimation of the three coefficients α, β, γ for the equation:

$$X(N) = \frac{\gamma N}{1 + \alpha (N - 1) + \beta N (N - 1)}$$

This is achieved by fitting the curve X(N) to the measured performance data (N, X) using non-linear least squares regression analysis.

In addition to general-purpose libraries that implement some of the algorithms commonly used to perform non-linear least squares regression analysis (e.g., the least squares package in Apache Commons Math or the optim package for GNU Octave), there are also freely available programs that can be used to carry out the computation; for example:

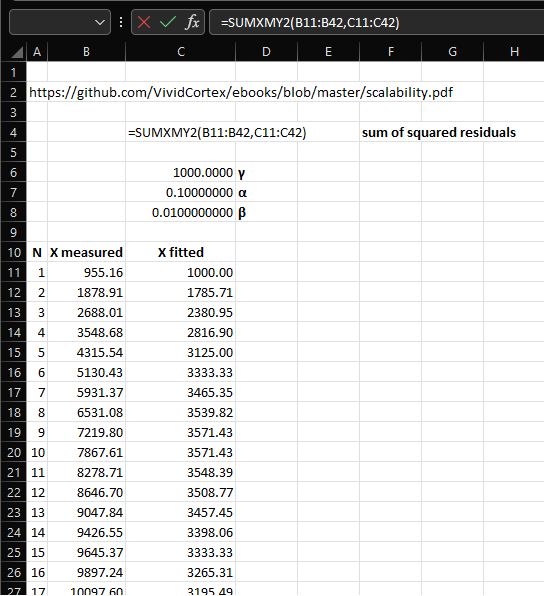

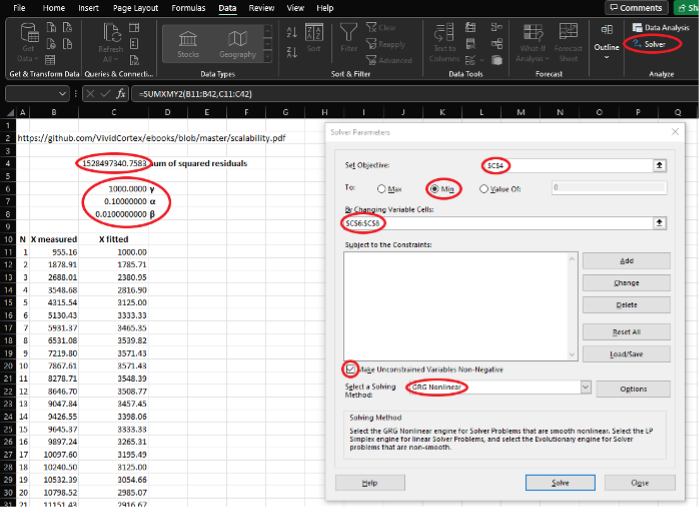

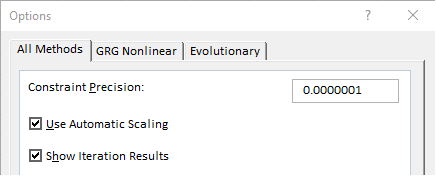

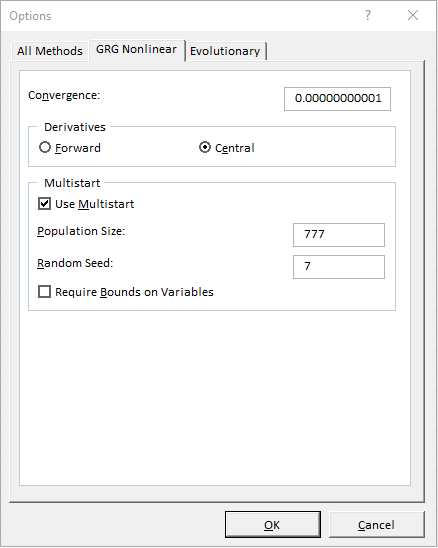
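For readers who prefer a short script, the fit can also be approximated with the Python standard library alone. The sketch below does not perform the non-linear regression described above; it swaps in a simpler technique. Assuming the standard three-parameter USL, the ratio N/X(N) = (1 + α(N − 1) + βN(N − 1))/γ is linear in the unknowns 1/γ, α/γ, and β/γ, so an ordinary least-squares fit of N/X against (N − 1) and N(N − 1) yields estimates of α, β, γ. Because it minimizes errors in N/X rather than in X, its results can differ somewhat from a true non-linear fit on noisy data and are best treated as a starting point. The function names and the synthetic data are hypothetical.

```python
def solve_3x3(a, b):
    """Solve the 3x3 linear system a*x = b by Gaussian elimination with pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, 3))
        x[r] = (m[r][3] - s) / m[r][r]
    return x


def fit_usl_linearized(points):
    """Estimate (alpha, beta, gamma) from (N, X) pairs via linear least squares.

    Model: N/X = a + b*(N-1) + c*N*(N-1),
    with a = 1/gamma, b = alpha/gamma, c = beta/gamma.
    """
    rows = [(1.0, n - 1.0, n * (n - 1.0)) for n, _ in points]
    ys = [n / x for n, x in points]
    # Normal equations: (A^T A) p = A^T y
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    aty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(3)]
    a, b, c = solve_3x3(ata, aty)
    return b / a, c / a, 1.0 / a


# Synthetic, noise-free data generated from known coefficients (not real measurements).
TRUE_ALPHA, TRUE_BETA, TRUE_GAMMA = 0.05, 0.0002, 90.0
LEVELS = [1, 2, 4, 8, 16, 32, 64]
DATA = [
    (n, TRUE_GAMMA * n / (1 + TRUE_ALPHA * (n - 1) + TRUE_BETA * n * (n - 1)))
    for n in LEVELS
]
ALPHA, BETA, GAMMA = fit_usl_linearized(DATA)
```

On noise-free data such as this, the estimates should match the generating coefficients up to rounding error; with real measurements, a non-linear fit of X itself remains the reference method.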

However, if the number of measurements to fit is not large, it is also possible to estimate α, β, γ using Microsoft Excel’s Solver add-in, without writing any code. The steps to calculate the values of α, β, γ that fit the measurements are:

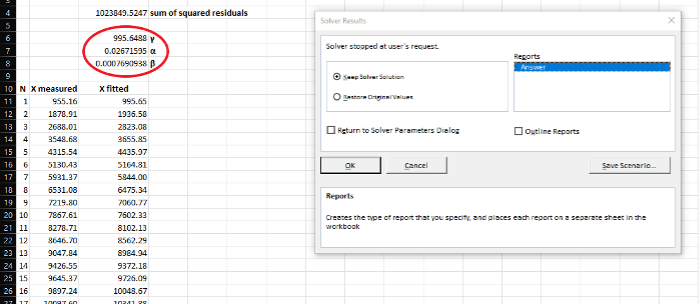

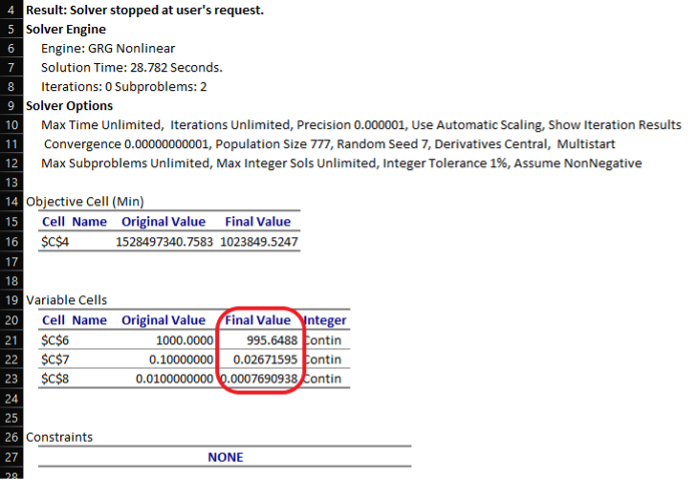

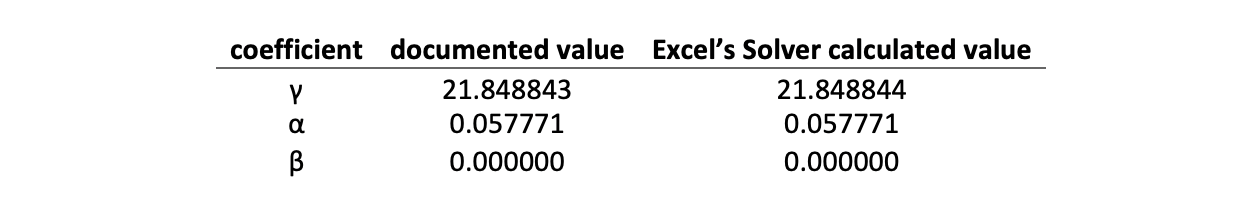

A similar approach can be used with LibreOffice Calc’s Solver.

To prove the practical applicability of the approach, the following section shows how to use Excel’s Solver to calculate the values of α, β, γ and compares them to the values generated by other methods for the same sets of measurements.

Examples with known results (α, β, γ)

The book Practical Scalability Analysis with the Universal Scalability Law by Baron Schwartz contains an example application of the USL to the results of running the sysbench benchmark on a Cisco UCS C250 rack server, in the chapter “Measuring Scalability” on page 15. The following screenshots show how to use Excel and its Solver add-in to calculate the best-fit values of the three coefficients in the USL formula for the performance data documented in that chapter.

After having implemented steps 1, 2, and 3, the spreadsheet should look like this:

The next screenshot shows the formula for step 4:

The main elements for steps 5, 5a, and 5b are highlighted in the following screenshot:

Additional Solver options mentioned in step 5d are shown by the next two screenshots:

The next screenshot shows the final state of the spreadsheet, after having stopped the Solver at the first iteration whose result diverged:

The Solver’s final dialog offers the option to generate an “answer report” (step 7), which consists of a sheet with information about the Solver set-up and execution:

The results yielded by the Solver are very close to the documented results, calculated using the R language’s nls function, which are:

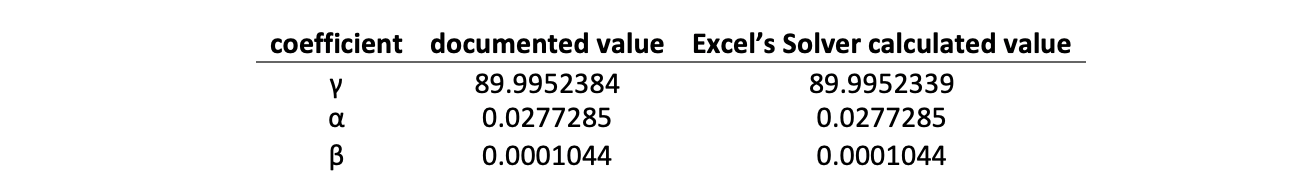

Two other examples can be found in the documentation of the usl R package, in sections “4.1 Case Study: Hardware Scalability” and “4.2 Case Study: Software Scalability”. These examples are provided by the package itself as demo datasets.

Following the same steps described above, for the first example (Hardware Scalability), the results are:

Note that for this example to reach convergence it might be necessary to add constraints for α ≤ 1 and β ≤ 1.

For the second example (Software Scalability), the results are:

These three examples show that it is possible to use Excel’s Solver add-in to quickly find values of α, β, γ that fit the USL to a set of performance measurements, with a precision similar to that of available implementations of non-linear least squares regression analysis algorithms.

Author's Bio

Paolo Cavazza - Lead Software Engineer

Paolo joined HCL Software in December 2020 to work with the Workload Automation Test Team as a Performance Tester.
