
Before turning to our Azure Databricks plugin and how it benefits workload automation users, let us start with what Azure is all about. “Azure is an open and flexible cloud platform that enables you to quickly build, deploy and manage applications across a global network of Microsoft-managed datacentres. You can build applications using any language, tool, or framework. And you can integrate your public cloud applications with your existing IT environment.” Azure lets you use multiple languages, frameworks, and tools to create the customised applications you need. As a platform, it also lets you scale applications up with additional servers and storage as demand grows.

What is Azure Databricks?

Azure Databricks is a data analytics platform optimized for the Microsoft Azure cloud services platform. It provides the latest versions of Apache Spark and integrates seamlessly with open-source libraries, so you can spin up clusters and build quickly in a fully managed Apache Spark environment with the global scale and availability of Azure. Azure Databricks offers three environments for developing data-intensive applications: Databricks SQL, Databricks Data Science & Engineering, and Databricks Machine Learning.

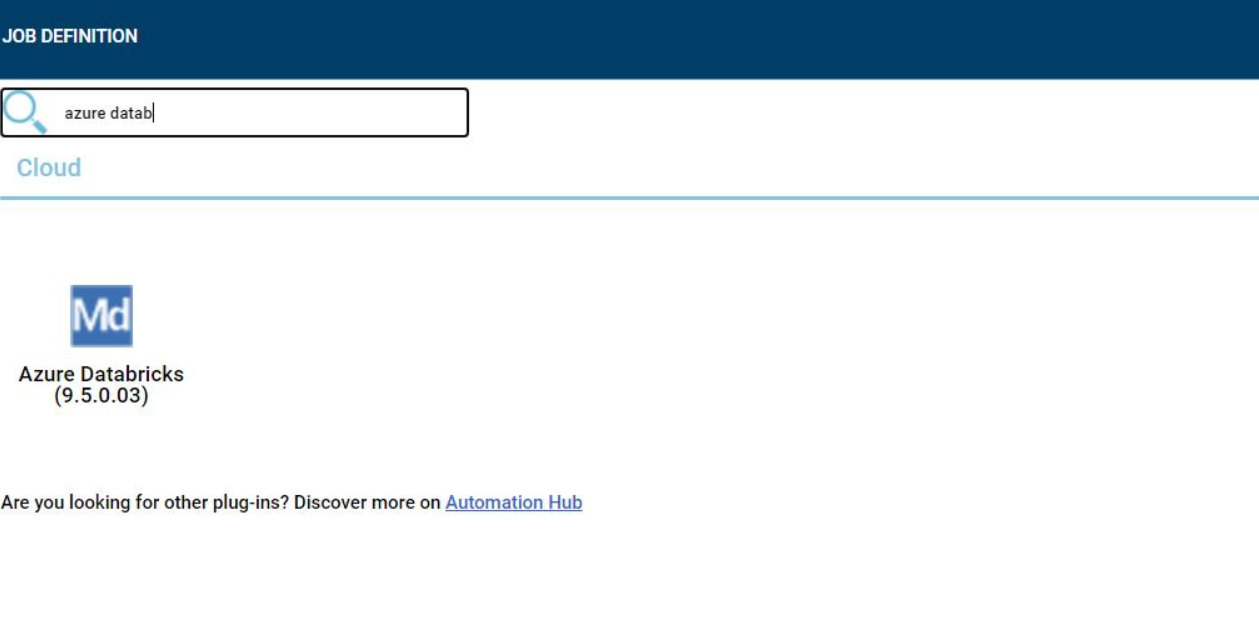

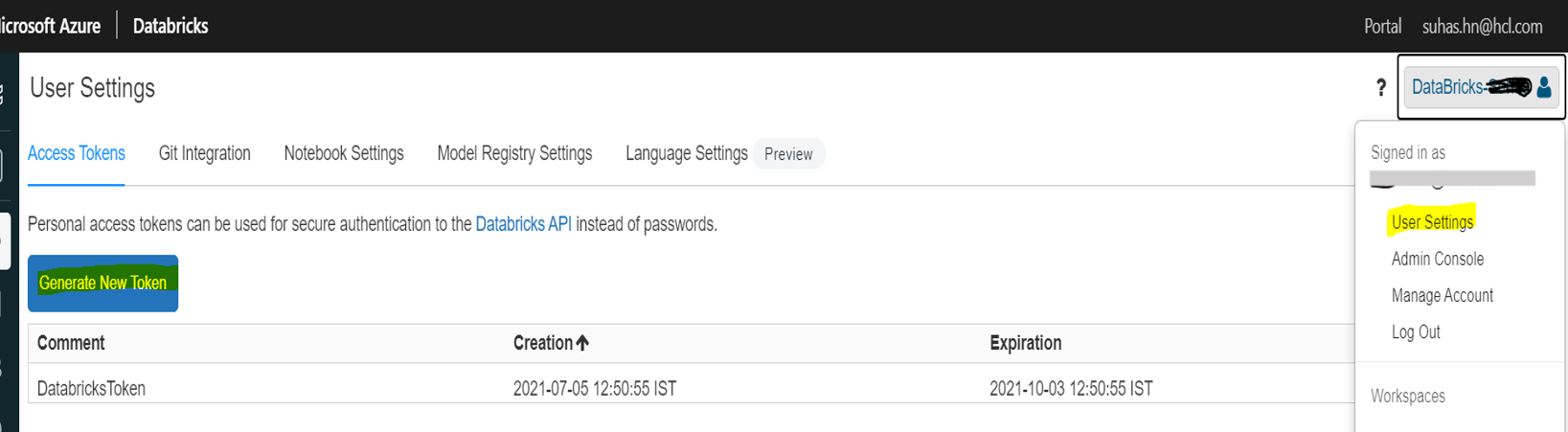

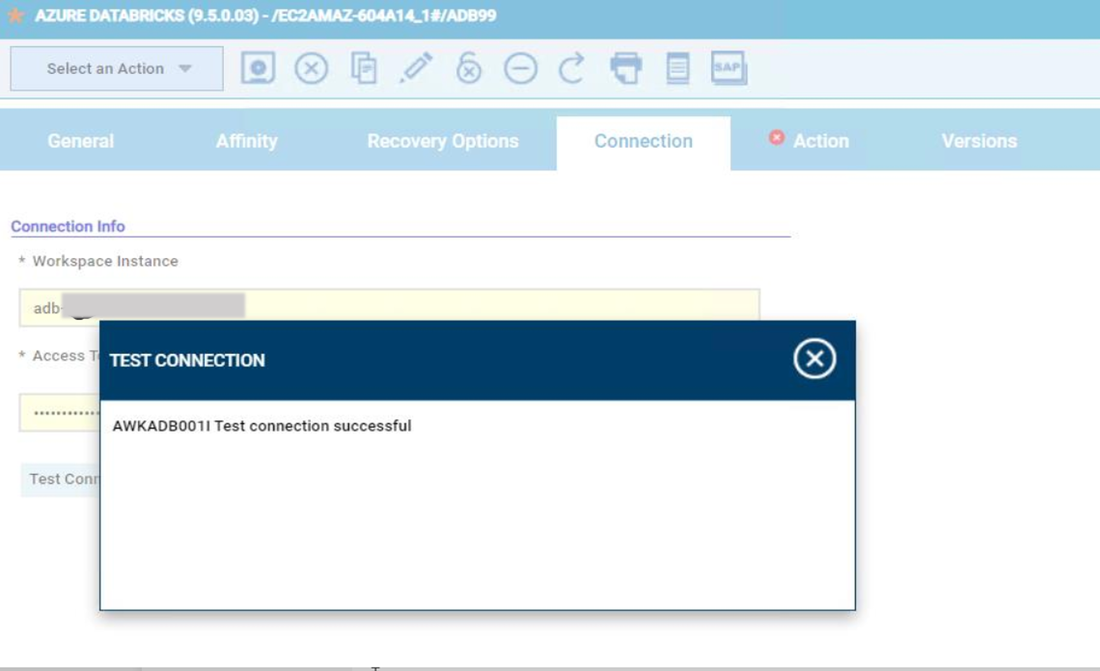

Azure Databricks Plugin

Log in to the Dynamic Workload Console and open the Workload Designer. Choose to create a new job and select the “Azure Databricks Plugin” job type in the Cloud section.

Fig 1: Job Definition

Connection Tab

Use this tab to establish the connection to the Azure Databricks workspace.

- Workspace Instance: the unique instance name (per-workspace URL) assigned to each Azure Databricks deployment. It is the fully qualified domain name used to log in to your Azure Databricks deployment and to make API requests, for example adb-<workspace-id>.<random-number>.azuredatabricks.net. The workspace ID appears immediately after adb- and before the first dot (.). For the per-workspace URL https://adb-5555555555555555.19.azuredatabricks.net/, the workspace ID is 5555555555555555.
- Access Token: enter the access token generated in the Azure cloud, which is used to authenticate to and access the Databricks REST APIs. You can generate a token under User Settings in the workspace (see Fig 2).
- Test Connection: click to verify that the connection to the Azure Databricks workspace works correctly.

Fig 3: Connection Tab

Action Tab

Use this section to define the operation details.

Operation

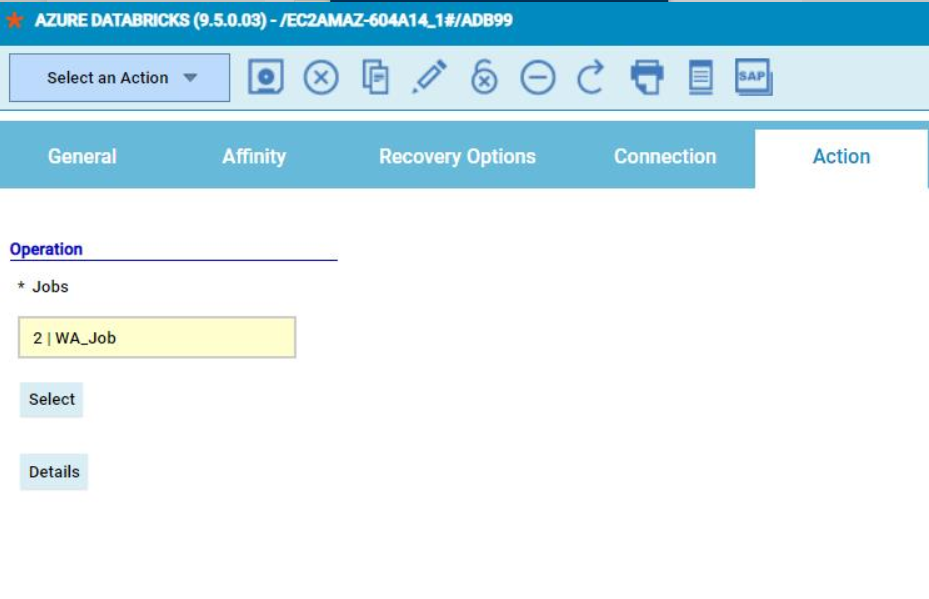

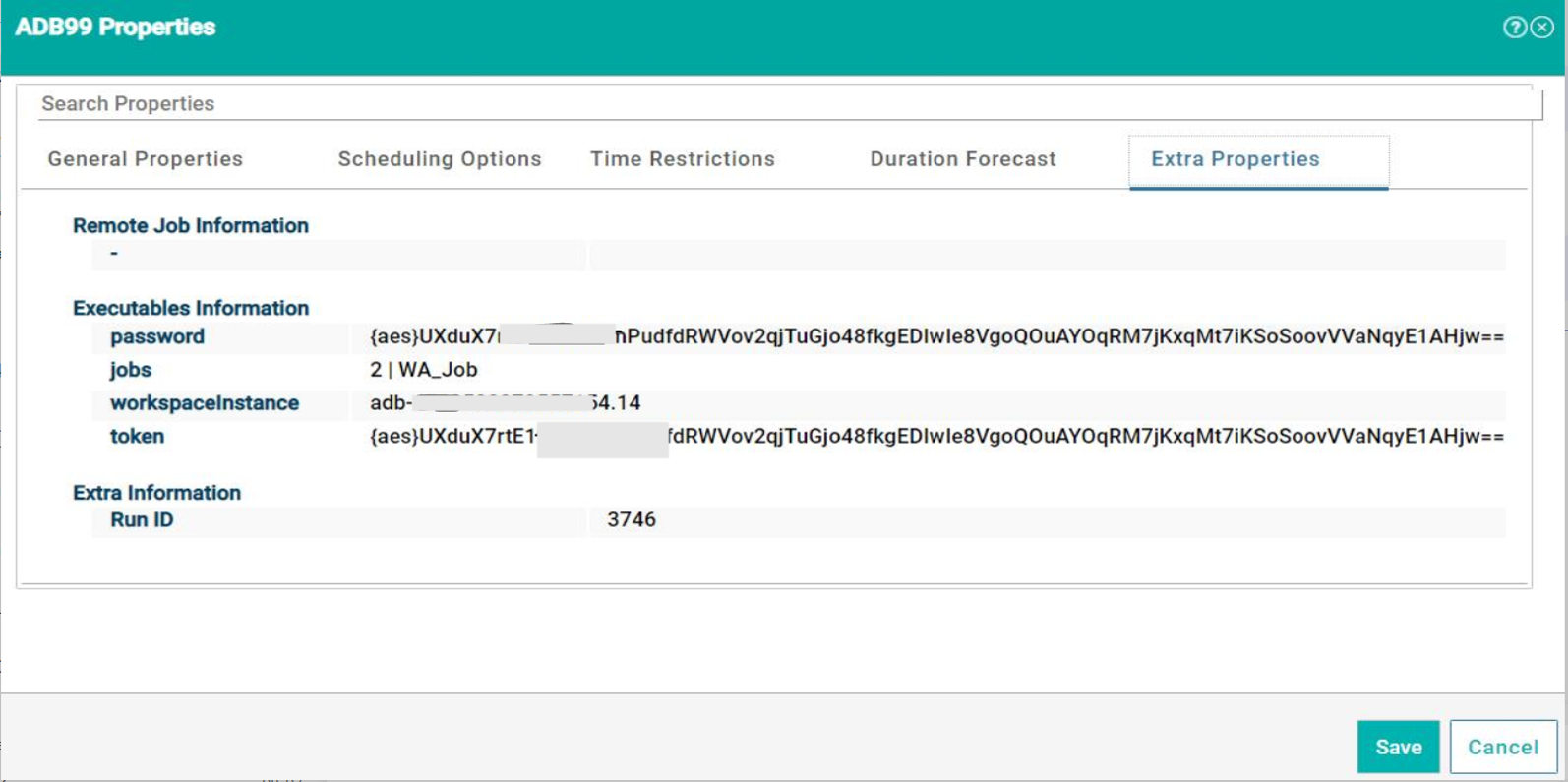

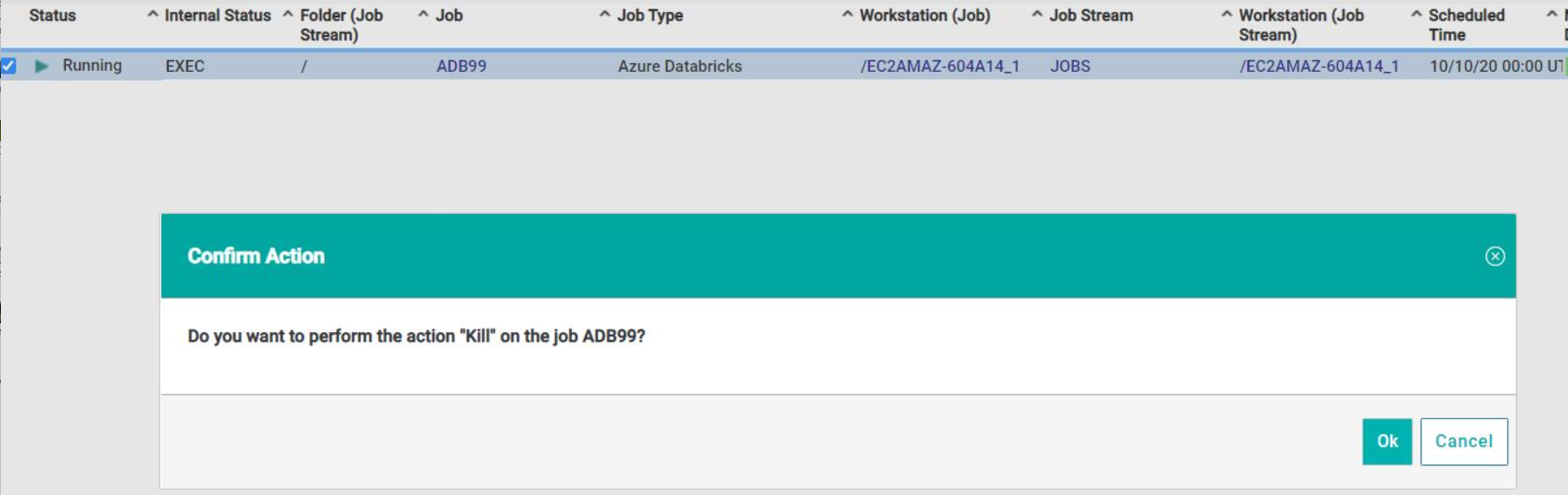

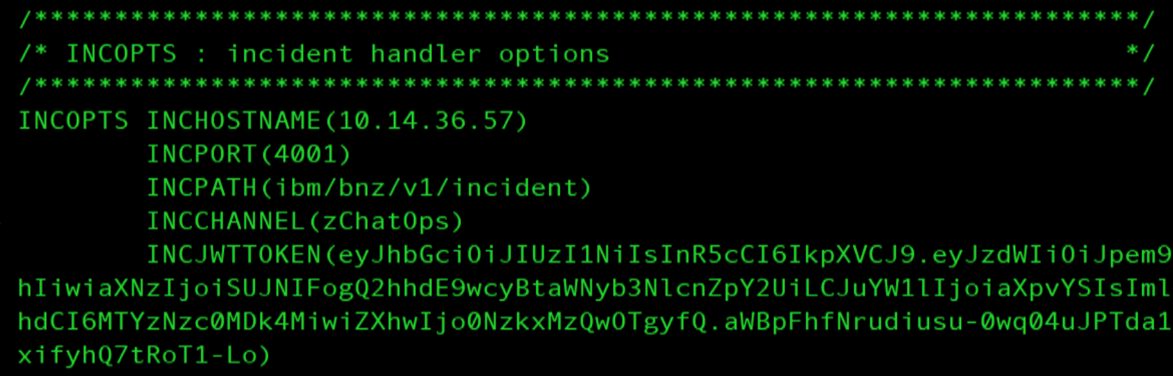

- Select: lists the jobs available in the workspace so you can choose one.
- Details: shows more information about the selected job.

Fig 4: Action Tab

Submitting your job

It is time to submit your job into the current plan. You can add your job to a job stream that automates your business process flow. Open the action menu in the top-left corner of the job definition panel and click Submit Job into Current Plan. A confirmation message is displayed, and you can switch to the Monitoring view to follow the job's progress.

Fig 5: Monitor page with extra properties

Once the job is submitted, you can cancel it with the Kill option.

Fig 6

Job Log

Fig 7
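The plugin's internals are not described here, but the two Connection tab fields map naturally onto the public Databricks REST API. The following minimal Python sketch (standard library only) shows how the workspace ID can be read from a per-workspace URL and how an access token is attached to a REST request as a bearer token. The helper names `workspace_id`, `build_request`, and `check_connection` are hypothetical, and using `GET /api/2.1/jobs/list` as a connectivity probe is an assumption, not necessarily what Test Connection does internally.

```python
import re
import urllib.request

# Per-workspace URL: https://adb-<workspace-id>.<random-number>.azuredatabricks.net/
_WORKSPACE_URL = re.compile(r"^(?:https://)?adb-(\d+)\.\d+\.azuredatabricks\.net/?$")


def workspace_id(instance: str) -> str:
    """Return the digits between 'adb-' and the first dot of a per-workspace URL."""
    match = _WORKSPACE_URL.match(instance.strip())
    if match is None:
        raise ValueError(f"not a per-workspace URL: {instance!r}")
    return match.group(1)


def build_request(instance: str, token: str, path: str) -> urllib.request.Request:
    """Build a GET request that authenticates with the access token as a bearer token."""
    host = instance.strip().removeprefix("https://").rstrip("/")
    return urllib.request.Request(
        f"https://{host}{path}",
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


def check_connection(instance: str, token: str) -> bool:
    """Hypothetical stand-in for Test Connection: True if an authenticated call succeeds."""
    req = build_request(instance, token, "/api/2.1/jobs/list?limit=1")
    try:
        with urllib.request.urlopen(req, timeout=30) as resp:
            return resp.status == 200
    except OSError:  # covers URLError, HTTPError (e.g. 401/403 for a bad token) and timeouts
        return False


print(workspace_id("https://adb-5555555555555555.19.azuredatabricks.net/"))  # 5555555555555555
```

In practice the token is better read from a secret store or environment variable than written into code.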
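The Select and Details operations, job submission, the Kill option, and the job log correspond roughly to operations in the Databricks Jobs API 2.1. Whether the plugin calls exactly these endpoints is an assumption; the Python sketch below only shows what the equivalent raw requests look like. The helper names are hypothetical, the requests are built without being sent, and `send` performs a call and decodes the JSON response.

```python
import json
import urllib.parse
import urllib.request

JOBS_API = "/api/2.1/jobs"  # Databricks Jobs API 2.1 base path


def _jobs_request(host, token, method, path, body=None):
    """Build an authenticated Jobs API request; POST bodies are sent as JSON."""
    headers = {"Authorization": f"Bearer {token}"}
    data = None
    if body is not None:
        data = json.dumps(body).encode("utf-8")
        headers["Content-Type"] = "application/json"
    return urllib.request.Request(
        f"https://{host}{JOBS_API}{path}", data=data, headers=headers, method=method
    )


def list_jobs_request(host, token):
    # "Select": the jobs defined in the workspace
    return _jobs_request(host, token, "GET", "/list")


def job_details_request(host, token, job_id):
    # "Details": the settings of one job
    query = urllib.parse.urlencode({"job_id": job_id})
    return _jobs_request(host, token, "GET", f"/get?{query}")


def run_now_request(host, token, job_id):
    # Submitting: trigger a run; the response carries the new run_id
    return _jobs_request(host, token, "POST", "/run-now", {"job_id": job_id})


def cancel_run_request(host, token, run_id):
    # "Kill": request cancellation of a run (Databricks cancels asynchronously)
    return _jobs_request(host, token, "POST", "/runs/cancel", {"run_id": run_id})


def run_output_request(host, token, run_id):
    # Job log: output of a run; for multi-task jobs this expects a task run ID
    query = urllib.parse.urlencode({"run_id": run_id})
    return _jobs_request(host, token, "GET", f"/runs/get-output?{query}")


def send(req, timeout=60):
    """Perform the request and decode the JSON response body."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)
```

For example, `send(run_now_request(host, token, job_id))` returns a JSON object that includes the new `run_id`, which is the value you would later pass to `cancel_run_request` or `run_output_request`.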

