
Developing a Web UI test automation suite is usually a challenging project. By nature, the graphical interface of any product tends to evolve and change very quickly, not only to offer users new features but also to improve the look and feel of the application. This continuous restyling of web pages typically translates into unsustainable maintenance costs for Web UI test automation code: test scenarios are frequently reworked in response to failures, and analyzing the latest execution report becomes a “hunt for defects”. Test scenarios are not only difficult to code, they also often turn out to be false positives, failing not for functional reasons but because of the fragility with which the tests were developed. This is critical, because it causes a substantial loss of confidence in the results of automatic execution.

However, our team has invested time and resources in automated testing, consolidating over the years an approach that is firmly part of our DevOps life-cycle. Different suites run daily to test the functional aspects of the various flavors of the product, its REST APIs, its installation procedures and its performance, and a test automation suite for the Web UI is provided as well. Automatic scenarios don’t replace manual testing (which is fundamental and indispensable), but every day they check that new code does not destabilize the existing functions of the application. They take over boring, repetitive operations and free the team to test the graphical interface in new and more complex scenarios.

In this context, Workload Automation 9.5 (whose release is currently planned for 1Q 2019) represents a real challenge because of the complete restructuring of its graphics: a new dashboard will be introduced, the menus will be reorganized, a way to customize the pages will be offered, and so on. Such radical changes could not have been easily absorbed by the Web UI test automation framework used so far, for a set of reasons:

However, the true added value of the new Web UI Test Automation project based on HCL OneTest UI is the “quality-oriented approach” we adopted. Based on the team's experience, we chose to follow a set of best practices and conventions while building the test scenarios. They enable important qualities that are typical of code development projects but are just as valuable in a test automation project. HCL OneTest UI is the tool that best met these needs.

BEST PRACTICES

A MODULAR APPROACH REALIZING TEST SCENARIOS

HCL OneTest UI is based on the concepts of:

We have decided to apply these two concepts in the following way:

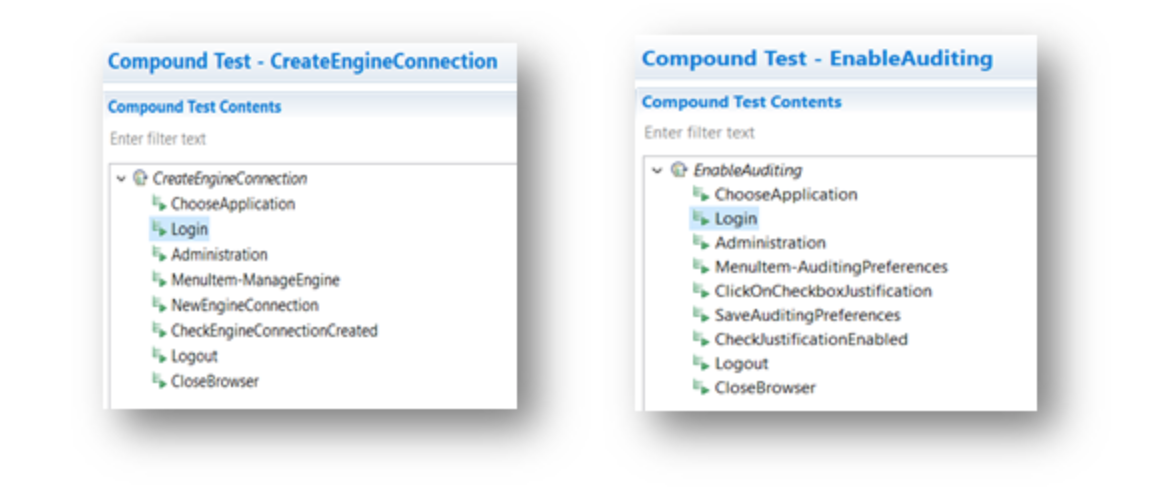

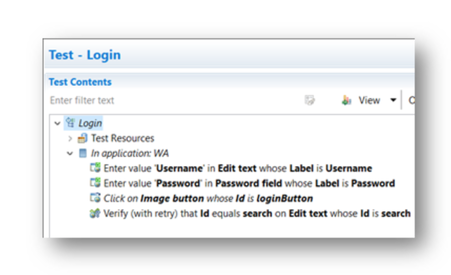

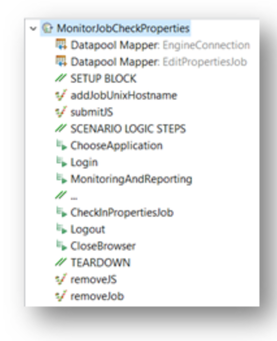

This allowed us to achieve an important level of modularity for the Test Automation project. As the images document, the "Login" Test is used in different Compound Tests. This way of structuring the scenarios makes the entire project easier to modify. If the login procedure changes (or, more likely, its graphical interface is restyled), the tester needs to refactor only the "Login" Test and not all the Compound Tests that use it. The tests also become reusable in completely new scenarios. Once the project reaches a certain maturity (and the Test library offers a wide range of already recorded interactions), developing a new scenario consists of composing and reorganizing already available "modules": only new interactions need to be recorded, with a substantial reduction in development and code-maintenance time.

SELF CONTAINED SCENARIOS

Each end-to-end scenario provides one or more tests that verify whether the particular functionality under test works as expected. Each scenario is designed to be minimal and therefore corresponds to a single behavior to be tested. Regardless of its outcome (success or failure), each test is self-contained: its failure does not depend on the outcome of previous tests, nor does its result affect the execution of subsequent tests. It’s a good practice to structure each end-to-end scenario so that it starts with a setup block (in which the test scenario is prepared) and ends with a set of teardown procedures (which ensure that, regardless of the test results, the resulting environment is identical to the one that existed before execution). To implement this concept in HCL OneTest UI we used:

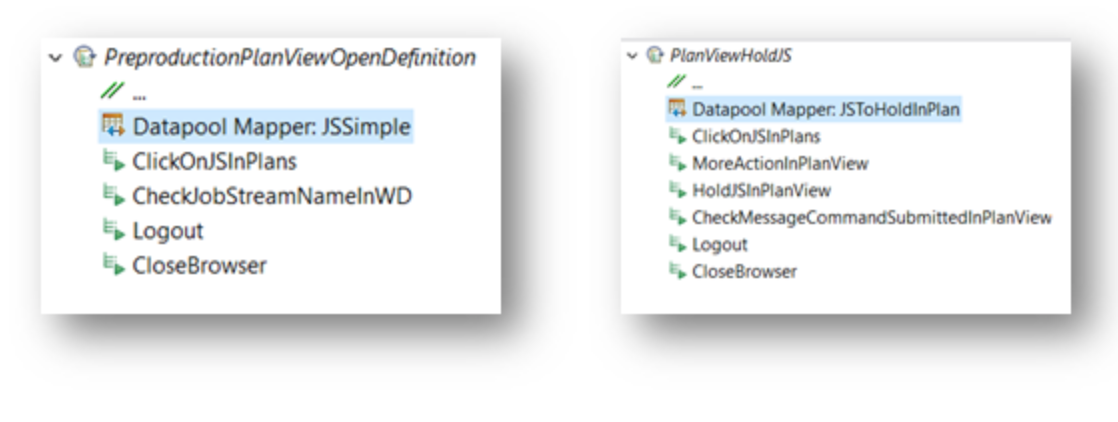

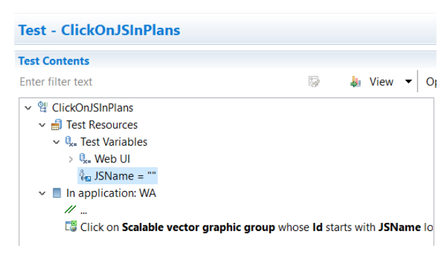
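Outside of any specific tool, the setup/teardown structure of a self-contained scenario can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not HCL OneTest UI code: the `environment` list, the `setup_job` and `teardown_job` helpers and the job names are all hypothetical stand-ins.

```python
from typing import Callable, List

# Hypothetical in-memory stand-in for the environment under test.
environment: List[str] = []


def setup_job(name: str) -> None:
    """Setup block: create the object the scenario needs."""
    environment.append(name)


def teardown_job(name: str) -> None:
    """Teardown block: remove the object so the environment is restored."""
    if name in environment:
        environment.remove(name)


def run_scenario(name: str, check: Callable[[], bool]) -> bool:
    """Run one self-contained scenario: setup, check, guaranteed teardown."""
    setup_job(name)
    try:
        return check()
    except Exception:
        # A crashing check counts as a failed scenario.
        return False
    finally:
        # Runs on success, failure and exception alike.
        teardown_job(name)
```

Because the teardown sits in a `finally` block, the environment is restored whatever the outcome, so scenarios written this way can run in any order.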

In compliance with these best practices, each scenario can be executed at any time, without having to respect any rigid order. Debugging failed scenarios also turned out to be simpler and faster.

DATA DRIVEN TESTING

HCL OneTest UI lets the tester store data in Datapools, tables whose values can be used during the execution of the scenarios. The obvious use of this data is in checks (through Verification Points). For example, in our case, it was necessary to verify that the object initialized with the REST APIs was correctly displayed on the Web page. It’s less evident that this functionality also has implications in terms of quality. By structuring the tests so that they expose variables, it is possible to generalize different interactions into a single test: it is sufficient to initialize the variables appropriately within the Compound Test to change the test's behavior. An example of this use of the Datapool is the following: in 2 scenarios, the same Test is used to click on 2 different "objects", basing the interaction on the value with which the JSName variable is initialized. The benefits are relevant:

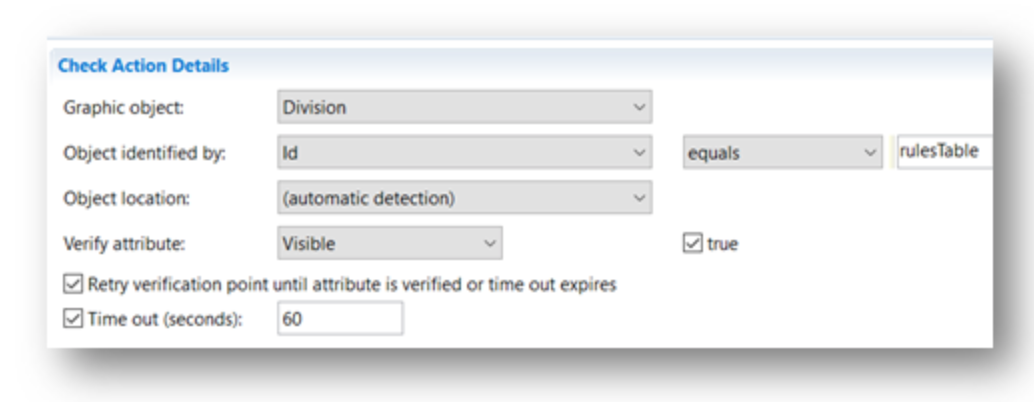

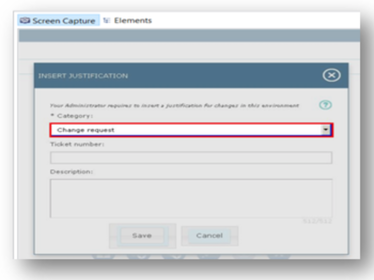

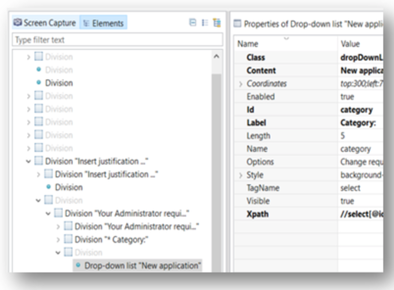
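The idea of one generic Test driven by a variable can also be illustrated in plain Python. The sketch below is not HCL OneTest UI code: the `DATAPOOL` table, the `click_object` function, the scenario names and the `JS_PAYROLL` / `JS_BACKUP` values are hypothetical, and only the `JSName` variable name comes from the example above.

```python
from typing import Dict, List

# Hypothetical datapool: one row per scenario, each initializing JSName.
DATAPOOL: List[Dict[str, str]] = [
    {"scenario": "open_first_object", "JSName": "JS_PAYROLL"},
    {"scenario": "open_second_object", "JSName": "JS_BACKUP"},
]


def click_object(page: Dict[str, int], js_name: str) -> None:
    """Generic test: click whichever object the variable names."""
    if js_name not in page:
        raise LookupError(f"object {js_name!r} not found on page")
    page[js_name] += 1  # count the click on the fake page


def run_all(page: Dict[str, int]) -> Dict[str, int]:
    """Run the same generic test once per datapool row."""
    for row in DATAPOOL:
        click_object(page, row["JSName"])
    return page
```

Adding a third scenario then means adding a row to the table, not recording a new interaction.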

LIMIT THE USE OF DELAYS

A concrete problem of Web UI testing is that interactions with page elements are never instantaneous: after clicking a button, closing a pop-up or simply changing the active page, the effects of the action cannot be verified immediately. In an automatic execution context this problem is even more significant. Since the update time of the page cannot be predicted a priori (it may depend on countless factors, such as network delay, the load on the machine at a given time, the complexity of the calculation to be performed, and so on), using a delay with a predefined waiting time to solve this type of problem is highly discouraged. Once again, the solution we adopted is based on Verification Points. Used cleverly, they let the tester force HCL OneTest UI to wait until a certain element is visible on the page. Obviously, the element must be significant with respect to the completion of the operation, and the maximum time (the timeout beyond which the action is considered failed) should be chosen pessimistically. It is important to remember that delays must not be banned altogether: there are situations in which pausing the test for a fixed amount of time is essential. In our case, for example, we need to wait some time for the WA engine to complete operations on the objects it manages before monitoring their status with the Web UI.

ELEMENT IDENTIFICATION STRATEGY

The robustness of test automation scenarios depends strictly on the way the automation tool identifies the elements. We’ve limited the impact of Web UI rework by basing our identification strategy on the ID (or the “class”) of the HTML elements in the page. In this way, even when the page layout is reconfigured, the test scenarios continue to work. Only in the rare cases in which the developers choose to change the ID of the elements do the tests show false positives and need to be reworked. To support this kind of approach, HCL OneTest UI offers 2 ways to browse the objects registered on the page:

Once the object identification procedure has been chosen, in the screen capture view the tool uses:
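As a tool-independent aside on the two practices above (waiting for a significant element instead of sleeping, and identifying elements by ID), the following Python sketch shows the general technique. It does not use any HCL OneTest UI API: `wait_until`, `element_visible`, the `visible_ids` set and the `saveButton` ID are hypothetical names chosen for illustration.

```python
import time
from typing import Callable, Set

# Hypothetical stand-in for the page: the IDs of the currently visible elements.
visible_ids: Set[str] = set()


def element_visible(element_id: str) -> bool:
    """Identify the element by its ID, not by its position in the layout."""
    return element_id in visible_ids


def wait_until(condition: Callable[[], bool], timeout: float,
               poll_interval: float = 0.1) -> bool:
    """Poll the condition until it holds or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True   # proceed as soon as the element is there
        if time.monotonic() >= deadline:
            return False  # timeout reached: treat the action as failed
        time.sleep(poll_interval)
```

A call such as `wait_until(lambda: element_visible("saveButton"), timeout=30.0)` returns as soon as the element appears, so a pessimistic timeout only costs time when the action really fails.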

CODE ORGANIZATION

HCL OneTest UI gives the tester the possibility to organize artifacts in directories. At first, this may seem to be only a way to distinguish between several types of objects (Compound Tests, Tests, Datapools, and so on), but it can concretely increase the reusability of the already recorded tests and thus avoid code duplication. We have chosen to organize the artifacts so that they closely follow the different functional areas of our product: administration, design, monitoring, reporting, and so on. Within them, we have grouped the tests from a logical point of view: interactions with Jobs, Job Streams, Calendars, and so on. The result is a structured and navigable project in which the available tests are easy to find (also thanks to the choice of "speaking" names).

PORTABILITY OF THE PROJECT

The quality of a test automation project also depends on the simplicity with which the execution:

In this regard, it was necessary to consolidate a few modification points, to prefer configuration files to hard-coded strings and to structure the execution on a parameterized and flexible Jenkins-based architecture. However, these aspects deserve to be analyzed separately in a different blog post.

CONCLUSIONS

With this approach, in the last 4 months we were able to complete the porting of the entire automatic suite implemented over the years with the framework adopted so far. Moreover, HCL OneTest UI helped us achieve a 35% increase in the suite’s coverage with new test scenarios. With more than 600 test suites already recorded, the team has a stable library of basic operations: at full working speed, a new scenario can be added to the suite in a matter of hours. The suite has been implemented to run on the different flavors of the Web UI with low configuration cost. At the moment, the Web UI Automation Project counts 175 end-to-end scenarios and runs daily as soon as the latest build of the product is ready. So far, the automation process has highlighted more than 40 defects during the development cycle of the product, and it will be really helpful in the near future, when the team is involved in the SVT activities.
